Camera removes reflections from photos taken through windows

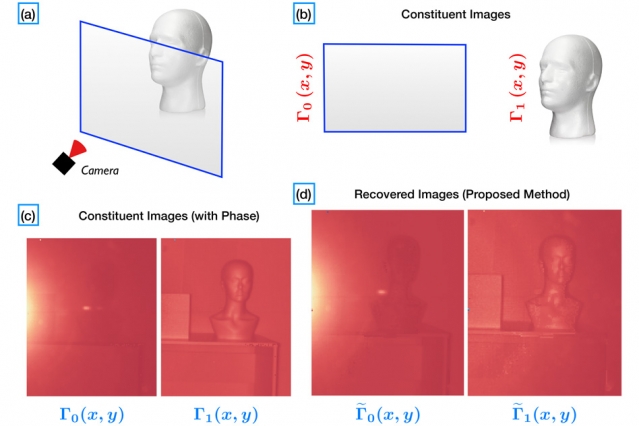

Taking a picture through glass produces unwanted reflections that spoil the photograph. Researchers from the MIT Media Lab's Camera Culture Group, presenting their work at the Institute of Electrical and Electronics Engineers' International Conference on Acoustics, Speech, and Signal Processing, have come up with a system that removes these reflections by shooting light into a scene and gauging the differences between the arrival times of light reflected by objects at different distances.

The Camera Culture Group has previously measured the arrival times of reflected light using an ultrafast sensor called a streak camera, but its new system relies instead on an inexpensive depth sensor found in video game systems: the Microsoft Kinect.

According to the team, it's impossible to build a camera that picks out multiple reflections directly, because it would need to slice time so finely that it would effectively be operating at the speed of light. To get around this, said Ayush Bhandari, a PhD student in the MIT Media Lab, the researchers turned to the Fourier transform.
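To see why direct time slicing is so demanding, consider the arithmetic for a hypothetical scene with a window 0.5 m from the camera and the subject 3 m away (both distances are illustrative assumptions, not figures from the study). Light covers each distance twice, out and back, so the two echoes arrive only about 17 nanoseconds apart:

```python
# Speed of light in a vacuum, in metres per second (exact by definition).
C = 299_792_458.0

def round_trip_delay(distance_m: float) -> float:
    """Time for light to travel from the camera to a reflector and back, in seconds."""
    return 2.0 * distance_m / C

# Hypothetical scene: a window 0.5 m from the camera, the subject 3 m away.
window_delay = round_trip_delay(0.5)
subject_delay = round_trip_delay(3.0)
gap_ns = (subject_delay - window_delay) * 1e9
print(f"Arrival-time gap: {gap_ns:.2f} ns")  # Arrival-time gap: 16.68 ns
```

A sensor would have to resolve time steps much shorter than that gap to tell the two reflections apart directly.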

The Fourier transform is a method for decomposing a signal into its constituent frequencies. The fluctuating intensity of the light hitting the sensor can be represented as an erratic up-and-down squiggle; the Fourier transform re-describes that squiggle as the sum of multiple, very regular squiggles, or pure frequencies.
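As a minimal illustration of that decomposition, the sketch builds an irregular-looking signal from two pure frequencies and uses a naive discrete Fourier transform to find them again. The direct O(N²) sum is chosen for readability; real code would use an FFT library. The signal and its frequencies are hypothetical.

```python
import cmath
import math

def dft(samples):
    """Naive discrete Fourier transform: one complex coefficient per frequency bin."""
    n = len(samples)
    return [
        sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(samples))
        for k in range(n)
    ]

N = 64
# An irregular-looking "squiggle" built from two pure frequencies (3 and 7 cycles per window).
squiggle = [
    1.0 * math.sin(2 * math.pi * 3 * t / N) + 0.5 * math.sin(2 * math.pi * 7 * t / N)
    for t in range(N)
]

spectrum = dft(squiggle)
# Keep only the non-negative frequencies (a real signal's spectrum is mirrored).
magnitudes = [abs(c) for c in spectrum[: N // 2]]
strong = [k for k, m in enumerate(magnitudes) if m > 1e-6 * N]
print(strong)  # [3, 7]
```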

Each frequency in a Fourier decomposition is characterized by an amplitude and a phase.
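To make amplitude and phase concrete, this sketch generates a single cosine with a known (hypothetical) amplitude and phase and reads both back from its Fourier coefficient: the coefficient's magnitude encodes the amplitude and its angle is the phase.

```python
import cmath
import math

def fourier_coefficient(samples, k):
    """Complex DFT coefficient of `samples` at frequency bin k."""
    n = len(samples)
    return sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(samples))

N = 64
A, PHI, K = 0.8, 0.6, 5  # hypothetical amplitude, phase (radians) and frequency bin
signal = [A * math.cos(2 * math.pi * K * t / N + PHI) for t in range(N)]

c = fourier_coefficient(signal, K)
amplitude = 2 * abs(c) / N  # undo the N/2 scaling of a real cosine's DFT peak
phase = cmath.phase(c)      # angle of the complex coefficient
print(round(amplitude, 6), round(phase, 6))  # 0.8 0.6
```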

If two light signals, one reflected from a window and one from a more distant object (the subject of the photograph), arrive at a light sensor at slightly different times, their Fourier decompositions will have different phases. Measuring phase therefore offers a way to measure the signals' times of arrival.
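The link between arrival time and phase can be checked numerically. Delaying a tone at frequency bin K by τ samples multiplies its Fourier coefficient by exp(-2πi·K·τ/N), so the phase difference between two echoes gives back their time gap. In this sketch the window echo arrives 1.5 samples into the measurement and the subject echo at 4.0 samples (hypothetical values). The recovery is only unambiguous while 2π·K·Δτ/N stays below π, because phase wraps around.

```python
import cmath
import math

def fourier_coefficient(samples, k):
    """Complex DFT coefficient of `samples` at frequency bin k."""
    n = len(samples)
    return sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(samples))

N = 100  # samples in the measurement window (hypothetical)
K = 4    # frequency bin (cycles per window) used to probe the scene

def delayed_wave(delay):
    """A pure tone at bin K, shifted later in time by `delay` samples."""
    return [math.cos(2 * math.pi * K * (t - delay) / N) for t in range(N)]

window_echo = fourier_coefficient(delayed_wave(1.5), K)
subject_echo = fourier_coefficient(delayed_wave(4.0), K)

# The ratio of the two coefficients cancels their common magnitude and leaves
# exp(-2j*pi*K*(tau_subject - tau_window)/N), whose angle encodes the time gap.
phase_gap = cmath.phase(subject_echo / window_echo)
recovered_gap = -phase_gap * N / (2 * math.pi * K)
print(round(recovered_gap, 6))  # 2.5
```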

Because a conventional light sensor can measure only intensity, not phase, the MIT team worked with Microsoft Research on a special camera that emits light modulated at only specific frequencies and gauges the intensity of the reflections.
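One standard way continuous-wave time-of-flight cameras turn intensity-only readings into phase is the "four-bucket" scheme: the return is correlated with a reference shifted by 0°, 90°, 180° and 270°, and the four brightness readings are combined. The sketch simulates that textbook technique; it is offered as an illustration under that assumption, not as a description of the Microsoft Research camera or the researchers' algorithm, and the amplitude, phase and offset values are hypothetical.

```python
import math

def bucket_readings(amplitude, phase, offset):
    """Simulated intensity-only readings for reference shifts of 0, 90, 180 and 270 degrees.

    The sensor never reports phase directly; each reading is just a brightness
    value equal to offset + amplitude * cos(phase - shift).
    """
    return [offset + amplitude * math.cos(phase - i * math.pi / 2) for i in range(4)]

def recover_amplitude_and_phase(readings):
    i0, i1, i2, i3 = readings
    # Subtracting opposite buckets cancels the constant offset (e.g. ambient light).
    sin_part = i1 - i3  # equals 2 * amplitude * sin(phase)
    cos_part = i0 - i2  # equals 2 * amplitude * cos(phase)
    return math.hypot(sin_part, cos_part) / 2, math.atan2(sin_part, cos_part)

# Hypothetical pixel: return amplitude 0.7, phase 1.2 rad, ambient offset 5.0.
amp, phase = recover_amplitude_and_phase(bucket_readings(0.7, 1.2, 5.0))
print(f"amplitude {amp:.3f}, phase {phase:.3f} rad")  # amplitude 0.700, phase 1.200 rad
```

The offset drops out entirely, which is why this kind of scheme tolerates ambient light.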

Those intensity measurements, combined with the number of reflectors positioned between the camera and the subject, enabled the researchers' algorithms to determine the phase of the returning light and separate out signals from different depths.
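Recovering phase from intensity alone (phase retrieval) is beyond a short sketch, so this example uses a simplified stand-in. It assumes each modulation frequency already yields a complex phasor (amplitude and phase), that the frequencies are evenly spaced, and that the number of reflectors is known to be two. Under those assumptions Prony's method, an annihilating-filter technique from sampling theory, separates the window glare from the subject using just four noiseless measurements. This is not claimed to be the researchers' algorithm; `OMEGA0`, `measure` and `separate_two` are hypothetical names, and the delays and amplitudes are made up.

```python
import cmath
import math

OMEGA0 = 2 * math.pi * 0.02  # hypothetical frequency step in radians per nanosecond (20 MHz)

def measure(reflectors, count):
    """Simulated noiseless phasors: sum of amplitude * exp(-i * k * OMEGA0 * delay) per reflector."""
    return [
        sum(a * cmath.exp(-1j * k * OMEGA0 * tau) for a, tau in reflectors)
        for k in range(count)
    ]

def separate_two(m):
    """Prony-style recovery of two (delay_ns, amplitude) pairs from phasors m[0..3]."""
    m0, m1, m2, m3 = m[:4]
    # Filter z^2 + h1*z + h2 whose roots are u_j = exp(-i*OMEGA0*tau_j) annihilates the data:
    # m[k+2] + h1*m[k+1] + h2*m[k] = 0 for k = 0, 1, solved here by Cramer's rule.
    det = m1 * m1 - m0 * m2
    h1 = (m0 * m3 - m1 * m2) / det
    h2 = (m2 * m2 - m1 * m3) / det
    disc = cmath.sqrt(h1 * h1 - 4 * h2)
    u1, u2 = (-h1 + disc) / 2, (-h1 - disc) / 2
    # Amplitudes follow from m0 = a1 + a2 and m1 = a1*u1 + a2*u2.
    a2 = (m1 - u1 * m0) / (u2 - u1)
    a1 = m0 - a2
    # Each root's angle gives its delay (valid while OMEGA0 * delay < pi).
    return sorted((-cmath.phase(u) / OMEGA0, abs(a)) for a, u in [(a1, u1), (a2, u2)])

# Hypothetical scene: faint window glare at 3.3 ns, brighter subject at 20 ns.
scene = [(0.4, 3.3), (1.0, 20.0)]
recovered = separate_two(measure(scene, 4))
for delay, amp in recovered:
    print(f"delay {delay:.2f} ns, amplitude {amp:.2f}")
```

With real, noisy data one would use many more frequencies (the article mentions a sweep of 45) and a least-squares variant rather than solving from exactly four samples.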

During testing, the researchers swept through 45 frequencies, achieving near-perfect image separation with about a minute of exposure time.

“What is remarkable about this work is the mixture of advanced mathematical concepts, such as sampling theory and phase retrieval, with real engineering achievements,” said Laurent Daudet, a professor of physics at Paris Diderot University. “I particularly enjoyed the final experiment, where the authors used a modified consumer product — the Microsoft Kinect One camera — to produce the untangled images. For this challenging problem, everyone would think that you’d need expensive, research-grade, bulky lab equipment. This is a very elegant and inspiring line of work.”

Story via MIT.
