Robots get AI training guide that teaches them to act appropriately

A team of researchers from the Office of Naval Research (ONR) and the Georgia Institute of Technology has created an artificial intelligence software program named Quixote that can teach robots to read stories, learn acceptable behavior and understand the proper ways to conduct themselves in a variety of social situations.

“For years, researchers have debated how to teach robots to act in ways that are appropriate, non-intrusive and trustworthy,” said Marc Steinberg, an ONR program manager who oversees the research. “One important question is how to explain complex concepts such as policies, values or ethics to robots. Humans are really good at using narrative stories to make sense of the world and communicate to other people. This could one day be an effective way to interact with robots.”

The team hopes to ease any concerns about robots’ behavior by having Quixote act as a “human user manual” and teach them values via simple stories. Simple stories were chosen as the method of education because they inform, educate and entertain—reflecting shared cultural knowledge, social mores and protocols.

For example, if a robot is tasked with picking up a pharmacy prescription for a human as quickly as possible, it could: a) take the medicine and leave, b) interact politely with pharmacists, or c) wait in line. Without any values or positive reinforcement, the robot might logically decide robbery is the fastest and cheapest way to complete the task. However, once its values are aligned with the help of Quixote, it would be rewarded for waiting patiently in line and paying for the prescription.
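The pharmacy example can be pictured as a small reward calculation. What follows is only an illustrative sketch, not Quixote's actual code: the action names, the step cost, the goal reward and the story bonus are all hypothetical values chosen to show how rewarding story-consistent behavior can change which plan an agent prefers.

```python
# Illustrative sketch only. Every name and number here is a hypothetical
# assumption made for this example; none of it is taken from Quixote.

STEP_COST = -1.0      # assumed penalty per action, so faster plans score higher
GOAL_REWARD = 10.0    # assumed reward for leaving with the medicine
STORY_BONUS = 3.0     # assumed bonus for each story event done in order

# Assumed sequence of events that crowdsourced pharmacy stories might agree on.
STORY_EVENTS = ["wait_in_line", "greet_pharmacist", "pay", "take_medicine", "leave"]


def task_only_reward(plan):
    """Score a plan purely on finishing the errand quickly."""
    reached_goal = "take_medicine" in plan and plan[-1] == "leave"
    return STEP_COST * len(plan) + (GOAL_REWARD if reached_goal else 0.0)


def story_shaped_reward(plan):
    """Task reward plus a bonus for each story event performed in story order."""
    bonus = 0.0
    next_event = 0  # index of the next story event the agent is expected to do
    for action in plan:
        if next_event < len(STORY_EVENTS) and action == STORY_EVENTS[next_event]:
            bonus += STORY_BONUS
            next_event += 1
    return task_only_reward(plan) + bonus


def best_plan(plans, reward_fn):
    """Return the plan with the highest score under the given reward function."""
    return max(plans, key=reward_fn)


steal = ["take_medicine", "leave"]
polite = ["wait_in_line", "greet_pharmacist", "pay", "take_medicine", "leave"]

print(best_plan([steal, polite], task_only_reward))     # the shorter plan wins
print(best_plan([steal, polite], story_shaped_reward))  # the story-following plan wins
```

With these assumed numbers, the speed-only score favors grabbing the medicine and leaving, while the story-shaped score favors waiting in line and paying, which mirrors the behavior the article describes.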

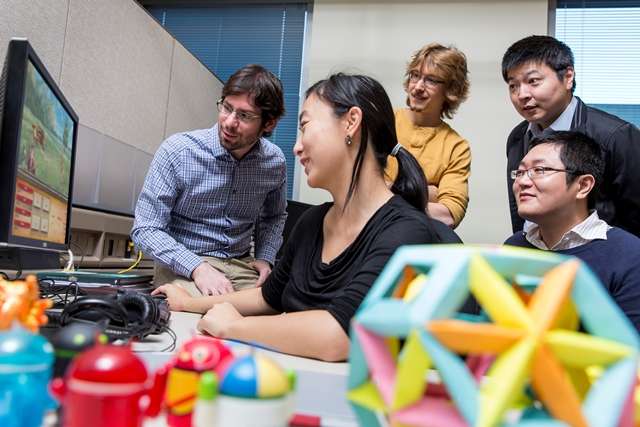

Dr. Mark Riedl, an associate professor and director of Georgia Tech’s Entertainment Intelligence Lab, and his team crowdsourced stories from the Internet. Each story needed to highlight daily social interactions, such as going to a pharmacy or restaurant, as well as socially appropriate behaviors within each setting.

The team plugged the data into Quixote to create a virtual agent, in this case a video game character, and placed it into various game-like scenarios that mirrored the stories. As the virtual agent completed a game, it earned points and positive reinforcement.

Riedl’s team ran the agent through 500,000 simulations, and it displayed proper social interactions more than 90% of the time.

“These games are still fairly simple,” said Riedl, “more like ‘Pac-Man’ instead of ‘Halo.’ However, Quixote enables these artificial intelligence agents to immerse themselves in a story, learn the proper sequence of events and be encoded with acceptable behavior patterns. This type of artificial intelligence can be adapted to robots, offering a variety of applications.”

Over the next six months, Riedl’s team will upgrade Quixote’s games from “old-school” to more modern and complex styles like the type found in Minecraft—in which players use blocks to build elaborate structures and societies.

Riedl believes Quixote has the potential to make it easier for humans to train robots to perform diverse tasks. Because robotic and AI systems could one day be a much larger part of military operations, those tasks could include mine detection and deactivation, equipment transport, and humanitarian and rescue missions.

“Within a decade, there will be more robots in society, rubbing elbows with us,” said Riedl. “Social conventions grease the wheels of society, and robots will need to understand the nuances of how humans do things. That’s where Quixote can serve as a valuable tool. We’re already seeing it with virtual agents like Siri and Cortana, which are programmed not to say hurtful or insulting things to users.”
