Unmanned aerial vehicle technology company DJI has teamed up with FLIR Systems to equip its drones with thermal-imaging technology for aerial applications.

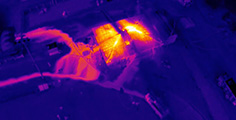

Doing so will allow DJI drones to provide different types of sensory data essential for firefighting, agriculture, inspection and other industrial use cases. FLIR cameras reveal details that are invisible to the naked eye by making subtle differences in temperature visible, so DJI drones will be able to detect damaged equipment or buildings, or even locate lost people.

“Almost every week we see new applications of our aerial technology,” said Frank Wang, DJI Founder and CEO. “Adding thermal imaging as an additional sensor option for aerial platforms will open up new and innovative uses for our users, whether it’s gaining strategic insight into how their crops are growing or more efficiently understanding the spread of fires.”

Next year, DJI will release Zenmuse XT, which it calls “the world’s most-powerful aerial thermal-imaging camera.”

The Zenmuse XT will integrate a FLIR thermal imager with gimbal stabilization and Lightbridge video-transmission technology. FLIR’s thermal camera can generate images at resolutions of up to 640 x 512 pixels.

The Zenmuse XT can be controlled via the DJI GO app, which gives users real-time data and a live view of what the camera sees.

Features will include:

- Spot metering, temperature measurement at the mid-point

- Digital zoom

- Single or interval shooting modes

- Photo, video preview and download

- Photo capture while recording video

- Various camera settings and parameters, including:

  - Palette, also referred to as Look-up Table (LUT)

  - Scene, also referred to as Automatic Gain Correction (AGC)

  - Region of Interest (ROI)

  - Isotherm mode

Learn more at DJI.