Screen-magnifying smartphone app developed for the visually impaired

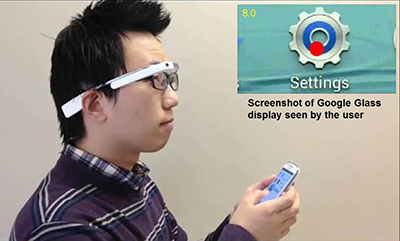

Have a hard time reading and navigating on your smartphone screen? About 1.5 million Americans over the age of 45 have low vision. Researchers from the Schepens Eye Research Institute of Massachusetts Eye and Ear/Harvard Medical School have now developed a smartphone app that projects a magnified view of the smartphone screen to Google Glass. Visually impaired users navigate by moving their heads, which brings the corresponding portion of the magnified screen into view.

In demonstrations, they have shown that the technology can benefit low-vision users, even those who feel that a smartphone’s built-in zoom feature is not helpful.

“When people with low visual acuity zoom in on their smartphones, they see only a small portion of the screen, and it’s difficult for them to navigate around — they don’t know whether the current position is in the center of the screen or in the corner of the screen,” said senior author Gang Luo, Ph.D., associate scientist at Schepens Eye Research Institute of Mass. Eye and Ear and an associate professor of ophthalmology at Harvard Medical School. “This application transfers the image of smartphone screens to Google Glass and allows users to control the portion of the screen they see by moving their heads to scan, which gives them a very good sense of orientation.”

According to the researchers, magnification is considered the most effective method of compensating for visual loss. Their head-motion application overcomes the limitations of conventional smartphone screen zooming, which does not provide sufficient context and can be difficult to navigate.
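To make the idea of head-controlled navigation concrete, here is a minimal sketch of how head rotation could be mapped to the visible portion of a magnified screen. It is an illustration only, not the researchers' implementation: the function name `viewport_origin`, the angle limits `max_yaw` and `max_pitch`, and the linear mapping are all assumptions made for this example.

```python
def viewport_origin(yaw_deg, pitch_deg, screen_w, screen_h, zoom,
                    max_yaw=30.0, max_pitch=20.0):
    """Map head rotation to the visible window on a magnified screen.

    Hypothetical mapping: a neutral head position (0, 0) shows the center
    of the screen, and turning the head to the assumed limits shows the edges.
    Returns (x, y, width, height) of the visible window in screen pixels.
    """
    # At a given zoom level, only 1/zoom of each screen dimension is visible.
    view_w = screen_w / zoom
    view_h = screen_h / zoom

    # Clamp the angles so the window never leaves the screen.
    yaw = max(-max_yaw, min(max_yaw, yaw_deg))
    pitch = max(-max_pitch, min(max_pitch, pitch_deg))

    # Normalize each angle to the range 0..1.
    # Assumed convention: positive yaw = right, positive pitch = down.
    fx = (yaw + max_yaw) / (2 * max_yaw)
    fy = (pitch + max_pitch) / (2 * max_pitch)

    # Scale to the distance the window can travel in each direction.
    x = fx * (screen_w - view_w)
    y = fy * (screen_h - view_h)
    return x, y, view_w, view_h


# Looking straight ahead at 4x zoom on a 1080x1920 screen shows the center.
print(viewport_origin(0, 0, 1080, 1920, zoom=4))
```

Because the window position depends directly on where the head is pointing, a user in this sketch always knows that looking straight ahead means the center of the screen, which is the sense of orientation the researchers describe.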

In tests, the researchers showed that head-based navigation reduced the average trial time by about 28% compared to conventional manual scrolling.

Next, the team will incorporate more Google Glass gestures for interacting with smartphones. Eventually, they would like to study the effectiveness of head-motion-based navigation compared to other commonly used smartphone accessibility features, such as voice-based navigation.

“Given the current heightened interest in smart glasses, such as Microsoft’s HoloLens and Epson’s Moverio, it is conceivable to think of a smart glass working independently without requiring a paired mobile device in the near future,” said Shrinivas Pundlik, Ph.D., who worked on the research. “The concept of head-controlled screen navigation can be useful in such glasses even for people who are not visually impaired.”

For a demonstration, view the video below.

Story via Massachusetts Eye and Ear.
