Building large-scale quantum computers remains a hard engineering problem. Packing millions of qubits into a single monolithic chip isn’t practical: as the chip grows, error rates rise and the system becomes unwieldy. Instead, researchers are increasingly turning to modular architectures, where smaller, high-quality quantum modules are linked together, much like snapping building blocks into place.

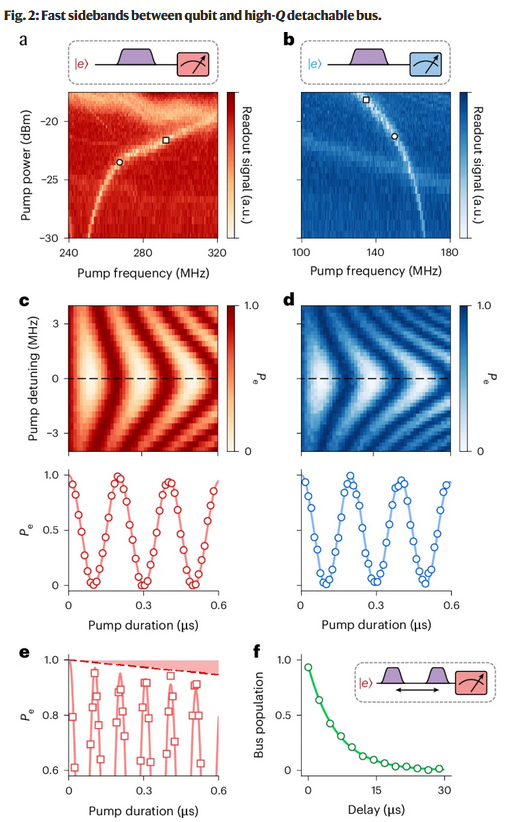

A team from the Grainger College of Engineering at the University of Illinois Urbana-Champaign has now demonstrated a promising path forward. In a study published in Nature Electronics, the group presented a high-performance modular design for superconducting quantum processors that could make scalable, reconfigurable, and fault-tolerant systems possible.

Instead of building one massive superconducting chip, the Illinois team connected separate devices using superconducting coaxial cables—effectively stitching qubits across modules. The approach delivered ~99% SWAP gate fidelity, meaning less than 1% error during qubit operations, a performance level good enough to justify scaling up.
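To put the ~99% figure in perspective, the short sketch below estimates how fidelity would fall off if several such inter-module SWAP gates were applied in sequence. It rests on a simplifying assumption that is not taken from the study: each gate fails independently, so the fidelities multiply. The function name `chained_fidelity` and the gate counts are illustrative only.

```python
def chained_fidelity(per_gate_fidelity: float, num_gates: int) -> float:
    """Rough fidelity estimate for `num_gates` gates applied in sequence.

    Assumption (not from the study): errors are independent and
    uncorrelated, so the overall fidelity is the product of the
    per-gate fidelities.
    """
    if not 0.0 <= per_gate_fidelity <= 1.0:
        raise ValueError("per_gate_fidelity must be between 0 and 1")
    if num_gates < 0:
        raise ValueError("num_gates must be non-negative")
    return per_gate_fidelity ** num_gates


if __name__ == "__main__":
    # 0.99 corresponds to the ~99% SWAP fidelity quoted in the article.
    for n in (1, 10, 50, 100):
        print(f"{n:>3} gates: estimated fidelity {chained_fidelity(0.99, n):.3f}")
```

Under this simple model, even a 1% per-gate error accumulates quickly over long gate sequences, which is one reason error correction sits alongside modularity in discussions of scaling.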

For engineers, modularity offers three clear advantages:

- Scalability: Add more modules instead of redesigning larger chips.
- Upgradability: Swap out faulty or outdated hardware without scrapping an entire system.
- Reconfigurability: Reassemble and reconnect modules to optimize performance or correct mistakes.

Wolfgang Pfaff, assistant professor of physics and senior author of the study, said the team’s engineering-driven approach aims to make modular quantum systems practical. “Can we connect devices, manipulate qubits jointly, and still maintain high quality—while also being able to take the system apart and put it back together? That’s the capability we need if quantum is going to scale.”

The next step for the researchers is to move beyond two-device setups, connecting more modules while keeping fidelity high and retaining the ability to check for errors.

For an industry where error correction and scalability remain the biggest roadblocks, the Illinois team’s results highlight how modular design could be the key to moving quantum computing from lab-scale demonstrations to real-world engineering systems.

Original Story: Building blocks and quantum computers: New research leans on modularity | Illinois Quantum Information Science and Technology Center | Illinois