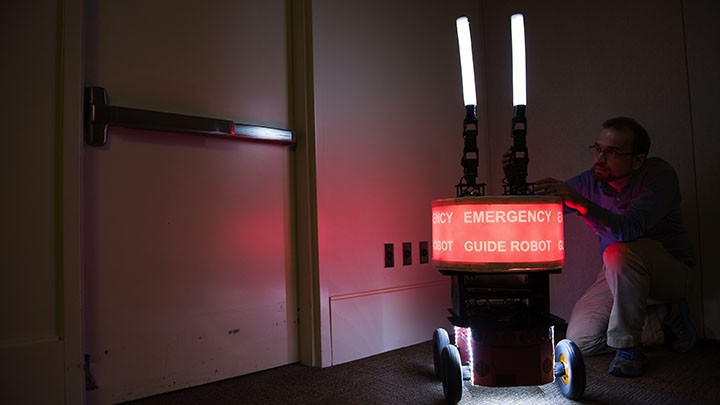

As more service and search-and-rescue robots are developed, Georgia Tech researchers wanted to gauge how much humans trust their mechanical companions.

To do so, the team deployed its “Emergency Guide Robot” in a mock building fire. Test subjects followed its instructions even after the machine had proven itself unreliable, and even after some participants were told that the robot had broken down.

The robot's role in the emergency was to help people evacuate more efficiently, and the researchers were surprised to find that test subjects followed its instructions even when it had given them little reason to trust it.

“People seem to believe that these robotic systems know more about the world than they really do, and that they would never make mistakes or have any kind of fault,” said Alan Wagner, a senior research engineer in the Georgia Tech Research Institute (GTRI). “In our studies, test subjects followed the robot’s directions even to the point where it might have put them in danger had this been a real emergency.”

The robot was controlled by a researcher hidden in a different room. In some cases, the robot led the subjects into the wrong room and traveled in a circle twice before reaching its final destination. For several test subjects, the robot navigated to a conference room while the hallways around it filled with smoke. Even after the robot had proven untrustworthy, the subjects still took its advice when it directed them out of the room.

Participants questioned the robot only when it made obvious errors, and even then some of them still followed it.

“We expected that if the robot had proven itself untrustworthy in guiding them to the conference room, that people wouldn’t follow it during the simulated emergency,” said Paul Robinette, a GTRI research engineer who conducted the study as part of his doctoral dissertation. “Instead, all of the volunteers followed the robot’s instructions, no matter how well it had performed previously. We absolutely didn’t expect this.”

To explain this behavior, the researchers suggested that the subjects may have viewed the robot as an authority figure, which would make them more likely to trust it under the time pressure of an emergency.

The research is part of a long-term study of how humans trust robots, an important issue as robots play a greater role in society.

“Would people trust a hamburger-making robot to provide them with food?” Robinette asked. “If a robot carried a sign saying it was a ‘child-care robot,’ would people leave their babies with it? Will people put their children into an autonomous vehicle and trust it to take them to grandma’s house? We don’t know why people trust or don’t trust machines.”
