Over the past few years we’ve seen a wave of technological breakthroughs that can assist people with disabilities. Examples include bionic eyes, high-tech prosthetic limbs, and even brain-controlled software that lets people move their prosthetic limbs by thought.

Now, a team of researchers from the University of Nevada, Reno and the University of Arkansas at Little Rock is working on a hand-worn robotic device that could help millions of blind and visually impaired people navigate past obstacles and pre-locate, pre-sense and grasp objects.

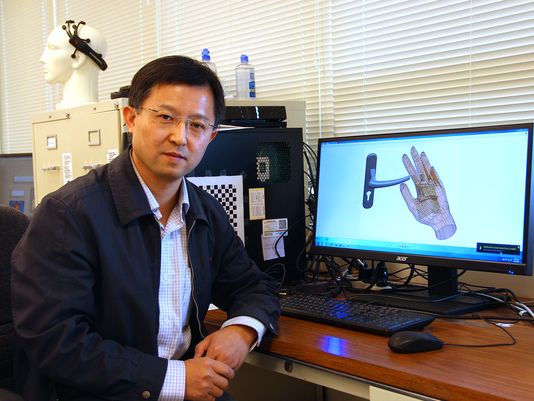

“The miniaturized system will contribute to the lives of visually impaired people by enabling them to identify and move objects, both for navigational purposes or for more simple things such as grasping a door handle or picking up a glass,” said Yantao Shen, assistant professor and lead researcher on the project from the University of Nevada, Reno’s College of Engineering. “We will pre-map the hand, and build a lightweight form-fitting device that attaches to the hand using key locations for cameras and mechanical and electrical sensors. It will be simpler than a glove, and less obtrusive.”

The team has just received an $82,000 grant from the National Eye Institute, part of the National Institutes of Health, to develop the technology. The device will combine vision, tactile, force, temperature and audio sensors with actuators to help the wearer pre-sense an object: it will tell the wearer where the object is, convey its shape and size, and then help the wearer grasp it.

“The visual sensors, very high resolution cameras, will first notify the wearer of the location and shape, and the proximity touch sensors kick in as the hand gets closer to the object,” said Shen. “The multiple sensors and touch actuators array will help to dynamically ‘describe’ the shape of the object to the hand when the hand is close to the object, allowing people with vision loss to have more independence and ability to navigate and to safely grasp and manipulate.”

Shen believes the work could also have a significant impact on small, wearable robots, with potential applications in space exploration, military surveillance, law enforcement, and search and rescue.

Story via University of Nevada, Reno.
