Machine Learning Creates 3D Model From 2D Pictures

Researchers at the McKelvey School of Engineering at Washington University in St. Louis have developed a machine learning algorithm that creates a continuous 3D model of cells from a partial set of 2D images taken with standard microscopy tools found in many labs. They published their research on Sept. 16 in the journal Nature Machine Intelligence.

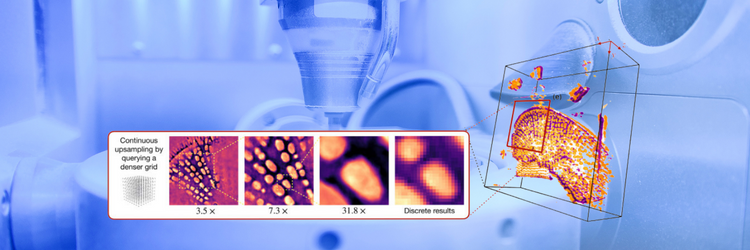

The team used a neural field network, a machine learning system that learns a mapping from spatial coordinates to the corresponding physical quantities. Once the network is trained, researchers can point to any coordinate and the model returns the image value at that location. Given enough 2D images of the sample, the network can represent the entire sample.
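As a rough illustration, a neural field is simply a function f(x, y, z) implemented by a small network. The sketch below is a toy under stated assumptions, not the researchers' architecture: the `NeuralField` class is hypothetical, it uses a Fourier-feature input encoding (a common choice for coordinate networks) and random, untrained weights. It shows only the property described above: the model can be queried at any continuous coordinate, not just at the grid points of a stored image.

```python
import numpy as np

def fourier_features(coords, num_freqs=4):
    """Map (N, 3) coordinates to sin/cos features at octave frequencies."""
    freqs = 2.0 ** np.arange(num_freqs) * np.pi               # (F,)
    angles = coords[:, :, None] * freqs                       # (N, 3, F)
    feats = np.concatenate([np.sin(angles), np.cos(angles)], axis=-1)
    return feats.reshape(coords.shape[0], -1)                 # (N, 6F)

class NeuralField:
    """Hypothetical coordinate network: (x, y, z) -> one scalar value."""

    def __init__(self, num_freqs=4, hidden=32, seed=0):
        rng = np.random.default_rng(seed)
        in_dim = 3 * 2 * num_freqs
        self.num_freqs = num_freqs
        # Random (untrained) weights; training would adjust these.
        self.W1 = rng.normal(0, 1 / np.sqrt(in_dim), (in_dim, hidden))
        self.b1 = np.zeros(hidden)
        self.W2 = rng.normal(0, 1 / np.sqrt(hidden), (hidden, 1))
        self.b2 = np.zeros(1)

    def __call__(self, coords):
        # Accept a single point or an (N, 3) batch of points.
        coords = np.atleast_2d(np.asarray(coords, dtype=float))
        h = np.tanh(fourier_features(coords, self.num_freqs) @ self.W1 + self.b1)
        return (h @ self.W2 + self.b2)[:, 0]

field = NeuralField()
value = field([0.1234, -0.5, 0.77])     # any continuous coordinate works
batch = field(np.random.default_rng(1).uniform(-1, 1, (10, 3)))
print(value.shape, batch.shape)         # (1,) (10,)
```

Because the "image" is a function rather than an array, the resolution at which it is sampled is chosen at query time.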

The images used to train the network are like any other microscopy images: a cell is lit from below, and the light that passes through it is captured on the other side to form an image.
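To make the imaging step concrete, here is a deliberately simplified model of how a 3D sample becomes a 2D image. It assumes straight-line light paths and pure absorption (the Beer-Lambert law); unstained cells mostly shift the phase of light rather than absorb it, and the researchers' imaging physics is not described here, so treat this only as an illustration. The functions `transmission_image` and `toy_cell` are hypothetical.

```python
import numpy as np

def transmission_image(absorption, dz=1.0, incident=1.0):
    """Simulate a 2D transmitted-light image of a 3D absorption volume.

    `absorption` is indexed (z, y, x). Light of intensity `incident` enters
    at z = 0 (below the cell), travels straight through every slice, and is
    recorded after the last slice. Each voxel attenuates the beam by
    exp(-mu * dz), so the exponents add up along the beam.
    """
    optical_depth = absorption.sum(axis=0) * dz   # integrate along z
    return incident * np.exp(-optical_depth)

def toy_cell(n=32, radius=10.0, mu=0.1):
    """Hypothetical sample: a uniform sphere of absorption `mu` in an n^3 volume."""
    z, y, x = np.mgrid[:n, :n, :n]
    c = (n - 1) / 2
    inside = (x - c) ** 2 + (y - c) ** 2 + (z - c) ** 2 < radius ** 2
    return np.where(inside, mu, 0.0)

image = transmission_image(toy_cell())
print(image.shape)   # (32, 32); darker in the middle, where the sphere is thickest
```

Because the sum along z discards where along the beam the absorption sits, a single image cannot pin down the 3D structure on its own, which is why several 2D images of the same sample are needed.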

Starting from the pixelated 2D images, the network fills in the missing pieces to produce a complete 3D representation. During training, it tries to recreate the structure captured in those images: if its output is wrong, the researchers tweak the network, and if it is correct, they reinforce that pathway. The trained model holds a complete, continuous representation of the cell, so there is no need to save a data-heavy image file; the neural field network can recreate it on demand.
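The training loop described above can be sketched as fitting a small coordinate network to measured values with a gradient-based optimizer (Adam here, chosen for this sketch). This is an assumption, not the researchers' code: the study trains against real 2D microscope images, whereas this sketch skips any imaging model and supervises the field directly with values of a hypothetical `toy_cell` function at random 3D points. In this framing, "tweaking" and "reinforcing" are one operation: the gradient that drives each update is built from the prediction errors, so points the network already gets right contribute almost nothing to it.

```python
import numpy as np

def encode(coords, num_freqs=2):
    """Fourier-feature encoding of (N, 3) coordinates -> (N, 6 * num_freqs)."""
    freqs = 2.0 ** np.arange(num_freqs) * np.pi
    angles = coords[:, :, None] * freqs
    feats = np.concatenate([np.sin(angles), np.cos(angles)], axis=-1)
    return feats.reshape(len(coords), -1)

def toy_cell(coords):
    """Hypothetical ground truth: a smooth blob standing in for a cell."""
    return np.exp(-4.0 * np.sum(coords ** 2, axis=1))

def fit_field(coords, targets, hidden=32, steps=2000, lr=0.01, seed=0):
    """Fit a one-hidden-layer coordinate network with Adam on mean squared error."""
    rng = np.random.default_rng(seed)
    feats = encode(coords)
    n, d = feats.shape
    params = [rng.normal(0, 1 / np.sqrt(d), (d, hidden)), np.zeros(hidden),
              rng.normal(0, 1 / np.sqrt(hidden), hidden), np.zeros(1)]
    m = [np.zeros_like(p) for p in params]   # Adam first moments
    v = [np.zeros_like(p) for p in params]   # Adam second moments
    beta1, beta2, eps = 0.9, 0.999, 1e-8
    for t in range(1, steps + 1):
        W1, c1, W2, c2 = params
        h = np.tanh(feats @ W1 + c1)
        err = h @ W2 + c2 - targets          # signed error of each prediction
        g_out = 2.0 * err / n                # d(MSE)/d(prediction)
        g_h = np.outer(g_out, W2) * (1.0 - h ** 2)
        grads = [feats.T @ g_h, g_h.sum(axis=0), h.T @ g_out, g_out.sum(keepdims=True)]
        for p, g, mi, vi in zip(params, grads, m, v):
            mi *= beta1
            mi += (1 - beta1) * g
            vi *= beta2
            vi += (1 - beta2) * g * g
            # In-place update, so W1, c1, W2, c2 see the new values.
            p -= lr * (mi / (1 - beta1 ** t)) / (np.sqrt(vi / (1 - beta2 ** t)) + eps)

    W1, c1, W2, c2 = params
    def field(query):
        q = np.atleast_2d(np.asarray(query, dtype=float))
        return np.tanh(encode(q) @ W1 + c1) @ W2 + c2
    return field, sum(p.size for p in params)

rng = np.random.default_rng(42)
train_x = rng.uniform(-1, 1, (2000, 3))     # points where "measurements" exist
field, n_params = fit_field(train_x, toy_cell(train_x))
test_x = rng.uniform(-1, 1, (500, 3))       # points never seen in training
truth = toy_cell(test_x)
test_mse = np.mean((field(test_x) - truth) ** 2)
print(n_params, test_mse)
```

The returned parameter count (449 numbers for this toy network) is what would be stored in place of a voxel grid; for comparison, a 256 × 256 × 256 grid holds 16,777,216 values. A network able to represent a real cell would be much larger than this toy, so the comparison shows only the bookkeeping, not the savings achieved in the study.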