The field of 3D scanning hasn’t quite been as popular as 3D printing, but researchers from Brown University have developed an algorithm that may now help bring high-quality 3D scanning capability to off-the-shelf digital cameras and smartphones.

“One of the things my lab has been focusing on is getting 3-D image capture from relatively low-cost components,” said Gabriel Taubin, a professor in Brown’s School of Engineering. “The 3-D scanners on the market today are either very expensive, or are unable to do high-resolution image capture, so they can’t be used for applications where details are important.”

![This new technique eliminates the need for synchronization, which makes it possible to do structured light scanning with a smartphone. (Image Credit: Taubin Lab / Brown University)]()

How a 3D scanner works

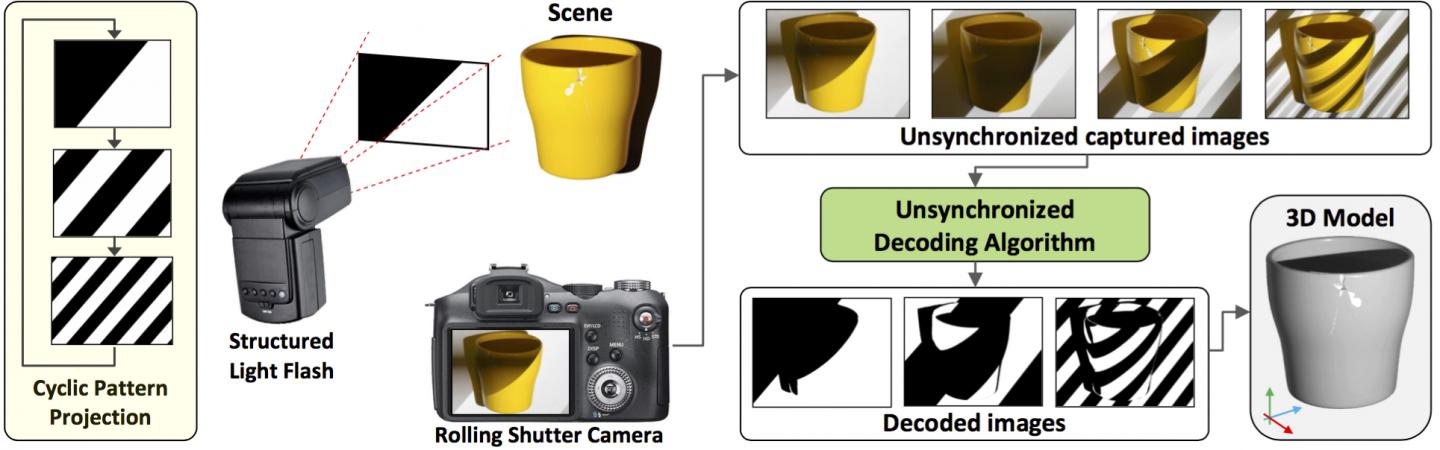

Most 3D scanners capture images using structured light: a projector casts a series of light patterns onto an object while a camera photographs it. The way those patterns deform over and around the object's surface encodes its shape, and that information is used to reconstruct the 3D image. For this to work, the projector and the camera must be precisely synchronized, which requires costly hardware.
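To illustrate how a sequence of projected patterns can encode position, here is a minimal sketch of binary-reflected Gray code stripe patterns, one common structured light coding scheme. The article does not say which patterns the Brown team projected, so the scheme and the function names `gray_code_patterns` and `decode_column` are assumptions for illustration. Each camera pixel records one bit per pattern (lit or dark), and that bit sequence identifies which projector column illuminated it. Intersecting the camera ray through the pixel with that projector column then gives the point's depth.

```python
def gray_code_patterns(width, n_bits):
    """Return n_bits stripe patterns (MSB first); each is a list of 0/1 values, one per projector column."""
    patterns = []
    for k in range(n_bits):
        shift = n_bits - 1 - k
        # Bit `shift` of the Gray code of every column index c.
        patterns.append([((c ^ (c >> 1)) >> shift) & 1 for c in range(width)])
    return patterns


def decode_column(bits):
    """Recover the projector column from the lit/dark bits one camera pixel observed (MSB first)."""
    gray = 0
    for b in bits:
        gray = (gray << 1) | int(b)
    # Gray-to-binary conversion: XOR the value with all of its right shifts.
    column = gray
    shift = gray >> 1
    while shift:
        column ^= shift
        shift >>= 1
    return column


# Hypothetical example: a 64-column projector needs 6 patterns.
patterns = gray_code_patterns(64, 6)
observed = [p[37] for p in patterns]  # what a pixel lit by column 37 would see
print(decode_column(observed))  # 37
```

Gray code is a popular choice because adjacent columns differ in exactly one bit, so a misread bit at a stripe boundary usually shifts the decoded column by only one.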

To solve this problem, Taubin and his students have developed an algorithm that lets the structured light technique work without synchronization between projector and camera. As a result, off-the-shelf cameras can be paired with an untethered structured light flash. The only requirement is that the camera be able to capture uncompressed images in burst mode, a feature many cameras and smartphones now offer.

The challenge

The difficulty in devising such a method is that the projector may switch from one pattern to the next while an image is still being exposed, producing a mixture of two or more patterns. A second problem is that many digital cameras use a rolling shutter. Instead of capturing the whole image in one instant, the camera scans the scene either vertically or horizontally, reading the image into memory one pixel row at a time. Because different parts of the image are captured at slightly different times, this can also produce mixed patterns.
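The mixing problem can be made concrete with a simple timing model. The sketch below assumes, hypothetically, that the projector shows pattern k during the interval [k * period, (k + 1) * period), that each sensor row starts exposing a fixed `row_delay` after the previous one, and that all times are integer microseconds; none of these parameters come from the article. A row is usable only if its exposure window falls inside a single pattern slot.

```python
def row_exposure_window(frame_start, row, row_delay, exposure):
    """Half-open exposure interval [start, end) of one sensor row under a rolling shutter (µs)."""
    start = frame_start + row * row_delay
    return start, start + exposure


def patterns_seen(window, period):
    """Indices of projected patterns whose display slot overlaps the exposure window.

    Assumes pattern k is shown during [k * period, (k + 1) * period).
    """
    start, end = window
    return list(range(start // period, (end - 1) // period + 1))


# Hypothetical numbers: ~30 patterns/s, 10 ms exposure, 30 µs between rows.
for row in (0, 200, 500):
    window = row_exposure_window(20_000, row, 30, 10_000)
    print(row, patterns_seen(window, 33_333))
# 0 [0]
# 200 [0, 1]   <- this row mixes two patterns
# 500 [1]
```

In the same frame, row 0 sees only pattern 0, row 500 sees only pattern 1, and row 200 is exposed across the switch, so its pixels record a blend of both.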

“That’s the main problem we’re dealing with,” said Daniel Moreno, a graduate student who led the development of the algorithm. “We can’t use an image that has a mixture of patterns. So with the algorithm, we can synthesize images–one for every pattern projected–as if we had a system in which the pattern and image capture were synchronized.”

How the algorithm works

Once the camera has captured the image bursts, the algorithm calibrates the timing of the image sequence using the binary information embedded in the projected patterns. It then sifts through the images, pixel by pixel, to assemble a new sequence of images, one for each projected pattern. Once these complete pattern images are assembled, a standard structured light 3D reconstruction algorithm can be used to create a single 3D image of the object or space.
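A much-simplified sketch of the assembly step follows. It assumes the timing calibration has already produced each frame's start time, and it uses a hypothetical rolling-shutter model in which pattern k is projected during [k * period, (k + 1) * period). Because every pixel in a row shares the same exposure window under that model, the sketch works row by row and simply keeps rows that were exposed under a single pattern. This is not the Brown algorithm, which the article does not describe in enough detail to reproduce; the function name and parameters are illustrative assumptions.

```python
def synthesize_pattern_images(frames, frame_starts, row_delay, exposure, period, n_patterns):
    """Assemble one image per projected pattern from unsynchronized rolling-shutter frames.

    frames: list of images, each a list of rows.
    frame_starts: start time (µs) of row 0 in each frame, assumed already calibrated.
    Returns n_patterns images; a row stays None if no frame saw it under one pattern only.
    """
    n_rows = len(frames[0])
    out = [[None] * n_rows for _ in range(n_patterns)]
    for frame, t0 in zip(frames, frame_starts):
        for r in range(n_rows):
            start = t0 + r * row_delay
            end = start + exposure  # exposure covers [start, end)
            first, last = start // period, (end - 1) // period
            # Keep the row only if it was exposed under exactly one pattern.
            if first == last and first < n_patterns and out[first][r] is None:
                out[first][r] = frame[r]
    return out


# Toy example: rows are labelled strings so the result is easy to read.
frames = [["A0", "A1", "A2"], ["B0", "B1", "B2"], ["C0", "C1", "C2"]]
images = synthesize_pattern_images(frames, [0, 90, 170], row_delay=30,
                                   exposure=20, period=100, n_patterns=2)
print(images)  # [['A0', 'A1', 'A2'], ['C0', 'B1', 'B2']]
```

Row B0 is discarded because its exposure straddles the switch from pattern 0 to pattern 1, so the first row of the pattern-1 image is taken from the next frame instead.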

The researchers showed that the technique works just as well as synchronized structured light systems. To test the method, the team used a standard projector, but the researchers now plan to develop a structured light flash that could be used as an attachment to any camera.

Story via Brown University.
