Zero-defect software – myth or reality?

For many developers, software development is considered a creative process; the art lies in mastering how the function and logic are expressed in the application’s lines of code. So what if it doesn’t always work as expected? Vector Software has recently published a whitepaper that examines this question.

Software now delivers key features and functions, including safety-critical functions, in so many pieces of equipment and systems that its development can no longer be left to an artisan approach. Software now needs to be constructed with the precision and quality seen in any modern manufacturing or assembly process.

Many will argue this has already been achieved by applying modern software development principles. Yet we still see regular news reports of product recalls due to software bugs. Investigations reveal many issues that should have been picked up in quality testing but, because of economic or ‘time-to-market’ pressure, the software didn’t receive sufficient testing before being released to market.

Vector sees many dissatisfied customers left with a broken product while they wait for a fix to become available. As embedded systems commentator Jack Ganssle noted: “80% of embedded systems are delivered late and new code generally has 50 to 100 bugs per thousand lines.”

For the purpose of this article, we will look at two measures that businesses need to manage, ‘lead time’ and ‘cycle time’, and at how both can be delivered within a software factory environment.

‘Lead time’ is what the customer sees: the complete elapsed time from when a new product or feature is first outlined, through approval and scheduling, design, coding and testing, to final release. It is usually set by business leaders in response to market data about customer needs or competitor activity. The process it spans is sometimes referred to as the systems development life cycle (SDLC).

‘Cycle time’ is a narrower measure, covering a subset of the whole manufacturing process: the time taken to actually create a feature through the design, code, test and release phases. A development project may contain a number of cycles, depending on how feature-rich the application is.
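The difference between the two measures is easiest to see with timestamps. The sketch below is a minimal illustration, assuming a hypothetical `FeatureRecord` with just three dates per feature; a real tracking tool would record more stages under its own field names.

```python
from dataclasses import dataclass
from datetime import datetime

SECONDS_PER_DAY = 86_400

@dataclass
class FeatureRecord:
    # Hypothetical fields, for illustration only.
    outlined: datetime        # feature first outlined: start of lead time
    design_started: datetime  # design work begins: start of cycle time
    released: datetime        # feature released: end of both measures

def lead_time_days(rec: FeatureRecord) -> float:
    """Elapsed time the customer sees, from outline to release."""
    return (rec.released - rec.outlined).total_seconds() / SECONDS_PER_DAY

def cycle_time_days(rec: FeatureRecord) -> float:
    """Time spent actually creating the feature, from design to release."""
    return (rec.released - rec.design_started).total_seconds() / SECONDS_PER_DAY

feature = FeatureRecord(
    outlined=datetime(2024, 1, 1),
    design_started=datetime(2024, 2, 1),
    released=datetime(2024, 3, 15),
)
print(lead_time_days(feature), cycle_time_days(feature))  # 74.0 43.0
```

The 31-day gap between the two is time spent waiting for approval and scheduling, which stays invisible if only cycle time is tracked.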

In this software manufacturing approach, cycle time is the critical part of the process that supports an organisation’s ability to deliver a product to its customers. The efficiency or agility of this part of the development process defines how quickly products are developed, assembled and released. It is therefore under the greatest pressure to deliver, yet without proper testing, quality issues go undetected, which means rework and retesting and ultimately slower delivery as tasks stack up.

Testing has always been the task that gets compromised when a project has to be delivered on time. Businesses neglect the potential commercial and reputational risk of not completing the testing needed for their software to meet their quality standards.

Vector often sees companies trying to achieve a six-week release cycle when it realistically takes three months to carry out every test for their application.

However, there are many quality-improvement lessons from manufacturing that can be applied to create a ‘factory’-type environment, ensuring businesses can achieve both their desired velocity and their desired quality.

More recently, the idea of producing software in a factory environment has been applied to outsourcing organisations that produce software to specific, defined user requirements, using a code assembly process at the best contracted price. However, because of the growing strategic importance of software, outsourcing is no longer considered viable, as discussed in the whitepaper ‘Competitive advantage: Reshoring all or part of any offshored testing function will improve software quality’.

More broadly, a software factory is defined as ‘the application of manufacturing techniques and principles to software development to mimic the benefits of traditional manufacturing’. The first mentions of a software factory date from the late 1960s and 1970s, originating in Japan with Hitachi, NEC and Fujitsu and then spreading to US companies such as System Development Corporation (SDC), which became part of Unisys in 1991.

We are now going to examine how to build agility and rigour into the software development process, that is, the manufacturing process for producing software. To develop zero-defect software, businesses need look no further than the lessons of the quality revolution, which started in industrial manufacturing in the late 1950s and continues today.

Building the ultimate software factory

To develop a software factory there are four defining principles.

As advocated by W. Edwards Deming and others, Kanban, Lean and Six Sigma are highly relevant to where the DevOps trend needs to be today and in the future. The whole span covered by lead time should be managed with a Kanban board that tracks the ‘work in progress’ (WIP).

Setting a WIP limit for each stage of the process, and monitoring the stages for clusters of work that signal a bottleneck, enables efficient remedial action to clear them. Most teams are also familiar with the elimination of ‘wastes’ defined by Lean, a common approach in Agile.
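Monitoring WIP limits amounts to counting the cards in each stage and comparing the count with that stage’s limit. A minimal sketch, assuming a hypothetical board represented as `(card_id, stage)` pairs and illustrative limits; real limits would come from the team’s capacity.

```python
from collections import Counter

# Hypothetical WIP limits per Kanban stage; real limits come from team capacity.
WIP_LIMITS = {"design": 3, "code": 4, "test": 3, "release": 2}

def stage_load(cards):
    """Count cards per stage; each card is a (card_id, stage) pair."""
    return Counter(stage for _, stage in cards)

def bottlenecks(cards, limits=WIP_LIMITS):
    """Return the stages whose WIP exceeds their limit, most overloaded first."""
    load = stage_load(cards)
    excess = {stage: load[stage] - limit
              for stage, limit in limits.items() if load[stage] > limit}
    return sorted(excess, key=excess.get, reverse=True)

board = [("F1", "design"), ("F2", "code"), ("F3", "test"),
         ("F4", "test"), ("F5", "test"), ("F6", "test")]
print(bottlenecks(board))  # ['test']
```

Here work is piling up waiting for testing, the pattern described earlier when testing cannot keep pace with development; the usual remedy is to stop pulling new cards into `code` until `test` drains.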

However, the pursuit of velocity can lead to a rapid rise in technical debt, which increases support costs in the longer term; this needs to be balanced against what satisfies the business. The process also needs to take account of customers’ expectations about how quickly change requests are answered and how soon software development delivers value. Like any system, it has to be scalable, as demand increases over time.

When working with legacy software, the approach needs to balance short-term rapid development with a longer-term effort to reduce the burden of architectural and technical debt.

Finally, whatever the process, there needs to be a clear answer to ‘how will we test?’ to prove compliance with requirements and quality standards.

Let’s start at the beginning of the lead-time process, before any coding has been done, and think about how we support the ‘how will we test?’ principle. Many developers are familiar with the design approaches offered by OMG’s Unified Modeling Language (UML), Agile’s user epics and/or stories, and model-based design.

Each allows the anticipated behaviour and functionality of features or applications to be described, and in many cases can produce basic code. What needs to happen at this stage is the adoption of the ‘how will we test?’ principle.

Take the example of an application that requires the user to input a password. What conditions need to be accommodated? Can the user have a mix of numbers, letters and punctuation characters, or only numbers, or only letters? How many characters?

There are many attributes that need to be tested to ensure the code that is going to be developed meets the requirements laid out in the use case or user story.
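Turning those questions into test cases before any code exists might look like the sketch below. The rules themselves (8 to 64 printable ASCII characters, at least one letter and one digit, punctuation allowed, spaces not) are assumptions made for illustration, since the example leaves them open; the point is that each answer becomes a test, including the values on either side of each limit.

```python
import string

# Hypothetical requirements, assumed for illustration only.
MIN_LEN, MAX_LEN = 8, 64
ALLOWED = set(string.ascii_letters + string.digits + string.punctuation)

def is_valid_password(pw: str) -> bool:
    """MIN_LEN..MAX_LEN allowed characters, with at least one letter and one digit."""
    if not MIN_LEN <= len(pw) <= MAX_LEN:
        return False
    if any(ch not in ALLOWED for ch in pw):
        return False
    return any(ch.isalpha() for ch in pw) and any(ch.isdigit() for ch in pw)

# Test cases derived from the requirements: (input, expected result).
REQUIREMENT_CASES = [
    ("abc12345", True),          # exactly MIN_LEN, letters and digits
    ("abc1234", False),          # one character too short (boundary)
    ("a1" * 32, True),           # exactly MAX_LEN
    ("a1" * 32 + "x", False),    # one character too long (boundary)
    ("abcdefgh", False),         # letters only
    ("12345678", False),         # digits only
    ("abc!1234", True),          # punctuation allowed
    ("abc 1234", False),         # space is not an allowed character
]

for pw, expected in REQUIREMENT_CASES:
    assert is_valid_password(pw) == expected, pw
```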

Even at this stage we should already be prioritising, for example using the MoSCoW principles (‘must have’, ‘should have’, ‘could have’, ‘won’t have’), to make sure we do not exceed the WIP limits of the Kanban system managing our workflow, given the capacity we have available.
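One simple way to apply MoSCoW against available capacity is a greedy pass in priority order. This is a sketch only: the backlog format and effort points are hypothetical, and ‘won’t have’ items are never scheduled. It also reports any ‘must have’ item that does not fit, which signals that scope or capacity needs to change before work starts.

```python
# MoSCoW categories, highest priority first.
PRIORITY = {"must": 0, "should": 1, "could": 2, "wont": 3}

def plan_iteration(backlog, capacity):
    """Greedily schedule (name, category, effort) items in MoSCoW order.

    Returns (scheduled names, 'must' items that did not fit).
    """
    scheduled, unfit_musts, used = [], [], 0
    # sorted() is stable, so items keep their backlog order within a category.
    for name, category, effort in sorted(backlog, key=lambda item: PRIORITY[item[1]]):
        if category == "wont":
            continue
        if used + effort <= capacity:
            scheduled.append(name)
            used += effort
        elif category == "must":
            unfit_musts.append(name)
    return scheduled, unfit_musts

backlog = [("login", "must", 5), ("reports", "should", 5),
           ("themes", "could", 2), ("export", "wont", 1)]
print(plan_iteration(backlog, capacity=8))  # (['login', 'themes'], [])
```

Being greedy, the sketch lets a small ‘could have’ fill space a larger ‘should have’ could not use; a team might prefer to leave that capacity as slack instead.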

We must continue with the ‘how will we test?’ principle when it comes to writing the application code, as this is the most critical stage.

Traditionally, and even more recently with Agile methodologies, QA and regression testing were left to the end of the development cycle. That isn’t the case in a modern industrial factory, where quality is checked throughout production.

Leaving testing until the end of the development process creates immense pressure on the business if the development phase of the project is delayed, as it reduces the time available to carry out the right level of testing. This usually results in the bare minimum being done to enable release and deployment.

In many cases the lack of comprehensive testing is what led to the recalls and rework mentioned earlier, and some failures have been catastrophic. One example is the loss of NASA’s Mars Climate Orbiter in 1999: one software component supplied thruster data in imperial units (pound-force seconds) while another expected metric units (newton-seconds), and the resulting navigation error sent the probe far too close to Mars, where it was lost. All because tests weren’t in place to check the parameters being passed from one system component to another.
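The missing check can be illustrated with a small interface test. This is not the orbiter’s software: it is a sketch assuming a hypothetical `Impulse` type that carries its unit alongside its value, so the consuming component must convert explicitly and a test can exercise the hand-over between components.

```python
from dataclasses import dataclass

N_S_PER_LBF_S = 4.4482216152605  # 1 pound-force second in newton-seconds

@dataclass(frozen=True)
class Impulse:
    value: float
    unit: str  # "N*s" or "lbf*s"

    def to_newton_seconds(self) -> float:
        if self.unit == "N*s":
            return self.value
        if self.unit == "lbf*s":
            return self.value * N_S_PER_LBF_S
        raise ValueError(f"unknown impulse unit: {self.unit!r}")

def navigation_update(impulse: Impulse) -> float:
    """Hypothetical consumer component that works only in newton-seconds."""
    return impulse.to_newton_seconds()

def test_imperial_input_is_converted_at_interface():
    # The producer reports 10 lbf*s; the consumer must see about 44.48 N*s,
    # not the bare number 10.
    assert abs(navigation_update(Impulse(10.0, "lbf*s")) - 44.482216152605) < 1e-9

def test_unknown_unit_is_rejected():
    try:
        navigation_update(Impulse(1.0, "furlong"))
    except ValueError:
        return
    raise AssertionError("expected ValueError for an unknown unit")

test_imperial_input_is_converted_at_interface()
test_unknown_unit_is_rejected()
```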

Closer to home, the Toyota unintended acceleration case came under extreme scrutiny, highlighting a litany of poor-quality coding errors, about 350 in total, which could have been avoided had the process and system outlined in the whitepaper been in place and followed.

Bugs can come into existence from the moment the first few lines of code are written. The best way to manage this process is to make sure the development team has the ability to test early and regularly.

The concepts of ‘shift-left testing’ and test-driven development (TDD) are growing in importance. They ensure that the test cases for any requirement exist before, or as, the code is written, and that they are used to verify its quality before it is uploaded to the code repository. This encourages keeping code segments small, which reduces debugging effort, and testing more frequently.

With TDD, each requirement can be treated as an individual Kanban card on the WIP board, giving a better overview of development and testing progress on the application before it is finalised.

In many instances developers don’t write new code for every requirement and function, but integrate code from a library of legacy code. The ‘how will we test?’ principle applies equally to legacy code, and we need to consider it in two ways.

Firstly, are there test cases available to test the quality of the software as an individual unit before it is integrated? This mirrors the way components used in manufacturing are inspected before going onto the production line.

Secondly, we need to understand the behaviour and requirements of the legacy code so that we can test that it still performs correctly after integration, in the same way you would check the output of a pump before and after installation to make sure it behaves as required. Its boundary conditions matter too, as the Mars orbiter case shows.
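A characterisation test applies the pump analogy directly: record what the legacy code does today, including at its boundaries, then check that the integrated or refactored version still does the same. The sketch below assumes a hypothetical `legacy_scale()` routine (a 10-bit sensor reading scaled to a percentage) and a ‘tidied’ rewrite that subtly changes behaviour.

```python
def legacy_scale(raw: int) -> int:
    """Stand-in for an undocumented legacy routine (hypothetical)."""
    if raw < 0:
        return 0
    if raw > 1023:
        return 100
    return raw * 100 // 1023      # truncating integer division

def refactored_scale(raw: int) -> int:
    """A 'tidied' rewrite that rounds instead of truncating."""
    return min(max(round(raw * 100 / 1023), 0), 100)

def capture_behaviour(func, inputs):
    """Record what the code does today, to serve as the reference."""
    return {x: func(x) for x in inputs}

def check_behaviour(func, recorded):
    """Return the inputs whose outputs differ from the recorded reference."""
    return [x for x, expected in recorded.items() if func(x) != expected]

# Boundary values around each branch, not just typical inputs.
INPUTS = [-1, 0, 1, 511, 1022, 1023, 1024]
REFERENCE = capture_behaviour(legacy_scale, INPUTS)

print(check_behaviour(refactored_scale, REFERENCE))  # [511, 1022]
```

Both mismatches are rounding differences the rewrite’s author may not have intended. Whether the new behaviour is acceptable is a requirements question, but the characterisation test makes sure it gets asked.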

If there isn’t a full set of tests available for a piece of legacy code, they need to be generated either manually or, ideally, automatically. An automatic test case generator is an excellent way to ensure that a full complement of unit (characterisation and robustness) tests is available to deliver key code coverage results, which show whether every line of code has been touched by a test case.
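To show what a line-coverage figure actually measures, here is a small sketch built on Python’s `sys.settrace` hook and `dis.findlinestarts`. It is a toy: production tools such as coverage.py, or the coverage features of commercial unit-test tools, handle far more cases. Note that full line coverage shows every line ran, not that every result was checked.

```python
import dis
import sys

def classify(x):
    if x < 0:
        return "negative"
    return "non-negative"

def executable_lines(func):
    """Line numbers in func that have bytecode, excluding the 'def' line."""
    code = func.__code__
    lines = {line for _, line in dis.findlinestarts(code) if line is not None}
    return lines - {code.co_firstlineno}

def coverage_report(func, inputs):
    """Run func once per input and return (lines covered, executable lines)."""
    code, hit = func.__code__, set()

    def tracer(frame, event, arg):
        if frame.f_code is not code:
            return None          # don't trace other functions
        if event == "line":
            hit.add(frame.f_lineno)
        return tracer            # keep receiving line events for this frame

    executable = executable_lines(func)
    sys.settrace(tracer)
    try:
        for value in inputs:
            func(value)
    finally:
        sys.settrace(None)
    return len(hit & executable), len(executable)

print(coverage_report(classify, [5]))      # one branch only
print(coverage_report(classify, [5, -1]))  # both branches
```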

In a software factory, the individual requirements are coded separately and then assembled into the final application, much like an assembly line. Therefore, whenever code is uploaded to the staging repository, the complete available set of tests needs to run to make sure that the application functions as designed. This integration testing needs to be continuous, so that there is always a fully tested source code base available to produce a product, rather than being held over until all the components are uploaded or run only periodically.

With the latest applications involving many millions of lines of code, a full integration test run can take hours, days or even weeks. The assembly line in the software factory must therefore be able to scale, which minimises cycle time and allows it to meet the lead time the business expects.

This means removing any bottlenecks from the process. The integration process should use a virtualised continuous integration (CI) test engine. CI centres on the ability to build and test an application continually, every time a change is made.
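At its core, the CI loop is simple: every change triggers the same ordered stages, and the change only counts as releasable if every stage passes. The sketch below assumes hypothetical stage functions; a real CI server such as Jenkins supplies the triggering, scheduling and virtualised workers around this loop.

```python
from typing import Callable

# A stage takes a commit id and returns True if it passed (hypothetical interface).
Stage = tuple[str, Callable[[str], bool]]

def run_pipeline(commit_id: str, stages: list[Stage]) -> tuple[bool, dict[str, str]]:
    """Run stages in order for one change, stopping at the first failure."""
    report: dict[str, str] = {}
    for name, stage in stages:
        passed = stage(commit_id)
        report[name] = "passed" if passed else "failed"
        if not passed:
            break  # later stages would only test a broken build
    releasable = len(report) == len(stages) and all(r == "passed" for r in report.values())
    return releasable, report

stages = [
    ("build", lambda commit: True),
    ("unit tests", lambda commit: True),
    ("integration tests", lambda commit: commit != "abc123"),  # simulated failure
]
print(run_pipeline("abc123", stages))
```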

Solving the problem of software quality and time-to-market is an ongoing fight, but continuous integration helps developers and engineers address these issues head on. Manual testing works well if the code base is small, but with embedded software now lying at the heart of so many products and projects, especially in regulated sectors, the process needs to be automated to cope with the scale of software being tested.

There are certain aspects we need to consider in the construction of the ultimate testing environment:

- Shift-left testing, as mentioned, needs tools that allow developers to test whenever needed.

- Have tools that give developers visibility of how complete their testing is, and that automatically generate test cases for code that is not yet fully tested.

- Build a repository that automates the job scheduling of the integration process. Developers need to be able to run integration and functional tests as easily as they can run unit tests.

- Parallelise and scale the test architecture to achieve a faster build time. This should include the ability to carry out tests and simulations of all hardware environments where the software will be deployed.

- Overlay intelligence that identifies the smallest set of tests that must be re-run after a change to the source code.
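The ‘smallest set of tests’ idea is usually called test impact analysis. A minimal sketch, assuming a hypothetical map from each test to the source files it exercises (in practice gathered from coverage data or the build graph): select only the tests that touch a changed file, and fall back to the full suite when a change touches a file the map knows nothing about.

```python
def select_tests(changed_files, test_deps):
    """Return the tests to re-run for a change.

    test_deps maps test name -> set of source files it depends on.
    """
    changed = set(changed_files)
    known = set().union(*test_deps.values()) if test_deps else set()
    if changed - known:
        # A changed file no test is mapped to: be safe and run everything.
        return sorted(test_deps)
    return sorted(t for t, deps in test_deps.items() if deps & changed)

# Hypothetical dependency map for illustration.
TEST_DEPS = {
    "test_brakes": {"brakes.c", "can_bus.c"},
    "test_radio": {"radio.c"},
    "test_gateway": {"gateway.c", "can_bus.c"},
}
print(select_tests(["can_bus.c"], TEST_DEPS))  # ['test_brakes', 'test_gateway']
print(select_tests(["radio.c"], TEST_DEPS))    # ['test_radio']
```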

This scalable, intelligent architecture gives a business a competitive advantage, because the business can more easily meet launch dates and react quickly to market changes.

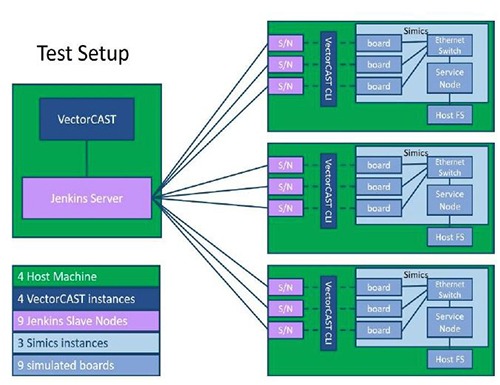

A scalable architecture can be built from readily available products. Jenkins, for example, is a server-based, open-source continuous integration tool written in Java. Where an incremental build of an application can take hours and tests can take weeks to run, Jenkins enables testing to run continuously and quickly each time a source code change is made.

Organising the tests is just one part of the process; a parallel testing infrastructure is also crucial to the time savings CI offers. With Jenkins as the CI server, the test targets need careful scrutiny, and a popular option is hardware simulators such as GHS Sim, TRACE32, Simics and Vector Informatik’s Virtual ECU simulator.
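The time saving from parallel infrastructure comes from running independent target configurations at the same time rather than one after another. A sketch using Python’s `concurrent.futures`; the target names are hypothetical labels, and `run_suite_on_target()` is a placeholder where a real job would launch a simulator and collect its results (no simulator’s actual command line is assumed here).

```python
import time
from concurrent.futures import ThreadPoolExecutor

def run_suite_on_target(target: str) -> tuple[str, bool]:
    """Placeholder: pretend to run the test suite on one simulated target."""
    time.sleep(0.2)          # stands in for a long-running simulation
    return target, True      # (target, all tests passed)

def run_on_all_targets(targets, runner=run_suite_on_target, max_workers=4):
    """Run the suite on every target concurrently; results keep input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return dict(pool.map(runner, targets))

targets = ["target_a_sim", "target_b_sim", "target_c_sim", "target_d_sim"]
start = time.perf_counter()
results = run_on_all_targets(targets)
print(results, f"{time.perf_counter() - start:.1f}s")
```

With four workers, the four 0.2-second placeholder runs finish in roughly the time of one rather than four. Threads suit this case because each worker mostly waits on an external simulator; the same arithmetic is what turns a weeks-long sequential run into one that fits a release cycle, provided enough simulator instances are available.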

Using Jenkins and hardware simulators together, engineers can select which environments to test and discover which cases need to be rebuilt and run based on the source code changes.

Engineers can set up different configurations of the same board to run comparable tests, which allows complete code coverage testing across those configurations. This also forms the basis of an ideal test engine for managing different variants of the application, depending on customer requirements.

In a modern agile software factory environment, the production lines need to produce slightly different variants of the same basic product, as other manufacturing lines do, without long delays while the line is changed over.
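Changing over the line then becomes a matter of data rather than retooling: the same tests run across every combination of board revision, customer variant and build type. A sketch with `itertools.product`; the board, variant and build names are hypothetical, and CI servers, including Jenkins, offer matrix-style jobs built on the same idea.

```python
from itertools import product

# Hypothetical product line: names are illustrative only.
BOARDS = ["board_rev_a", "board_rev_b"]
VARIANTS = ["base", "premium"]
BUILD_TYPES = ["debug", "release"]

def build_matrix(boards, variants, build_types, exclude=()):
    """Every (board, variant, build type) combination, minus excluded ones."""
    excluded = set(exclude)
    return [combo for combo in product(boards, variants, build_types)
            if combo not in excluded]

# Suppose the premium variant is never built for debug on rev A.
matrix = build_matrix(BOARDS, VARIANTS, BUILD_TYPES,
                      exclude=[("board_rev_a", "premium", "debug")])
for board, variant, build in matrix:
    print(f"run tests: {board} / {variant} / {build}")
print(len(matrix))  # 7
```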

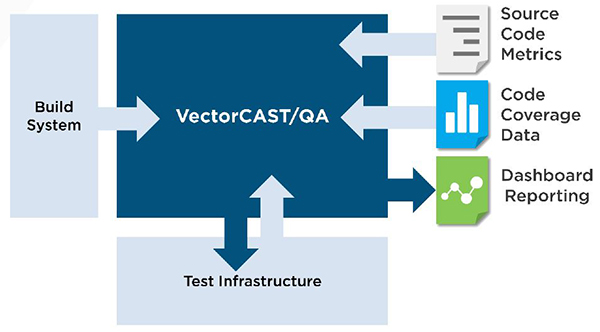

We’ve already discussed code coverage and its importance in knowing that every line of code has been exercised. However, code coverage isn’t the whole picture: there will also be coding standards that need to be met, especially if the application is going to be used in a regulated, safety-critical environment.

Therefore, just as a manufacturing production line has a final inspection process, the software factory needs an equivalent.

The QA process needs to integrate with the assembly system to silently collect key metrics such as code complexity, frequency of code changes, test case status and code coverage data. It needs to provide development and QA engineers with a single point of control for test activities, as well as a wealth of data that can be used to make quality improvement decisions.
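Code complexity is one of the metrics such a process can collect silently from source. The sketch below computes a simplified McCabe cyclomatic complexity (one plus the number of decision points) using Python’s standard `ast` module on Python source, purely to show the idea; a real QA system would analyse the product’s own language, and this count ignores refinements such as comprehension conditions.

```python
import ast

# Nodes that add one decision point (a simplified McCabe count).
BRANCH_NODES = (ast.If, ast.For, ast.AsyncFor, ast.While, ast.IfExp, ast.ExceptHandler)

def cyclomatic_complexity(func_node: ast.AST) -> int:
    """1 + number of decision points inside the function (nested defs included)."""
    complexity = 1
    for node in ast.walk(func_node):
        if isinstance(node, BRANCH_NODES):
            complexity += 1
        elif isinstance(node, ast.BoolOp):
            # 'a and b and c' adds two extra paths.
            complexity += len(node.values) - 1
    return complexity

def complexity_report(source: str) -> dict[str, int]:
    """Map each function name in the source to its complexity."""
    tree = ast.parse(source)
    return {node.name: cyclomatic_complexity(node)
            for node in ast.walk(tree)
            if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef))}

SAMPLE = '''
def simple(x):
    return x + 1

def branchy(x, y):
    if x > 0 and y > 0:
        return 1
    for i in range(x):
        if i == y:
            return 2
    return 0
'''
print(complexity_report(SAMPLE))  # {'simple': 1, 'branchy': 5}
```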

No changes to the WIP workflow or tools are required: as normal system testing activities take place, a data repository is built up that becomes an oracle for answering questions such as:

- How much testing has been done?

- What testing remains to be done?

- Are we ready to release?

- Where should I invest more testing effort?

Conclusion

At the outset, we set out to establish whether a zero-defect software factory is a reality. Research indicates that while totally zero-defect software development is a lofty ideal, a more realistic view is that a near-zero-defect software factory is feasible.

It would require applying the lessons learnt from the likes of W. Edwards Deming in combination with today’s established software development techniques and methodologies.

However, there is one caveat: a business must understand the risks of not taking testing to heart and making it a fundamental practice in delivering the highest-quality software possible. Failing to do so can lead to customer dissatisfaction and ultimately erode the value of the company.