How Magnets Could Lead to More Accurate and Efficient Artificial Intelligence

By Dawn Allcot

Purdue University researchers have developed a process that uses magnetics with brain-like networks to program and teach AI-powered devices such as personal robots, self-driving cars, and drones to better generalize about different objects.

With rapid advances in self-driving cars, marketing and advertising, human resources, robotics, and a host of other fields, artificial intelligence has already changed the way human beings approach specific tasks.

But the new AI technology, built around stochastic neural networks, behaves even more like a human brain, computing through a network of neurons and synapses. The new technology is more energy efficient and requires less computer memory to execute processes. The computer brain not only stores information but can also generalize accurately about objects and then make inferences to better distinguish between them. These skills have multiple applications in automated vehicles, drones, and personal or industrial robots.

How It Works

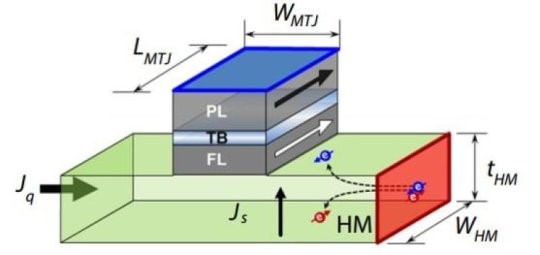

The switching dynamics of a nano-magnet are similar to the electrical dynamics of neurons. Magnetic tunnel junction devices show switching behavior that is stochastic in nature.

The stochastic switching behavior is representative of the sigmoid switching behavior of a neuron. Such magnetic tunnel junctions can also be used to store synaptic weights.
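The idea of a device that switches with a sigmoid-shaped probability can be illustrated in software. The sketch below is only an illustration, not the Purdue device model: the function names, the `steepness` and `threshold` parameters, and the logistic form of the curve are assumptions made for this example.

```python
import math
import random


def switching_probability(input_current, steepness=1.0, threshold=0.0):
    """Sigmoid-shaped probability that the (hypothetical) magnet switches."""
    return 1.0 / (1.0 + math.exp(-steepness * (input_current - threshold)))


def stochastic_neuron(input_current, rng, steepness=1.0, threshold=0.0):
    """Return 1 (switch / spike) with sigmoid probability, otherwise 0."""
    p = switching_probability(input_current, steepness, threshold)
    return 1 if rng.random() < p else 0


def empirical_rate(input_current, trials=10_000, seed=0):
    """Fraction of trials in which the neuron switched for a fixed input."""
    rng = random.Random(seed)
    hits = sum(stochastic_neuron(input_current, rng) for _ in range(trials))
    return hits / trials


if __name__ == "__main__":
    for current in (-3.0, 0.0, 3.0):
        print(current, empirical_rate(current))
```

Averaged over many trials, the fraction of switching events traces out the sigmoid curve, which is the sense in which a single random device can stand in for a sigmoid neuron.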

The Purdue group proposed a new stochastic training algorithm for synapses, termed Stochastic-STDP, based on spike-timing-dependent plasticity (STDP), a learning mechanism that has been experimentally observed in the rat hippocampus. The inherent stochastic behavior of the magnet was used to switch the magnetization states stochastically, based on the proposed algorithm, to learn different object representations.
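A stochastic, STDP-style update for a two-state synapse might look like the following sketch. It is an assumption-laden illustration rather than the published algorithm: the exponential dependence of the switching probability on spike timing, the values of `p_max` and `tau_ms`, and all function names are hypothetical choices made here.

```python
import math
import random


def switch_probability(dt_ms, p_max=0.1, tau_ms=20.0):
    """Assumed rule: switching gets less likely as the spikes move apart in time."""
    return p_max * math.exp(-abs(dt_ms) / tau_ms)


def stochastic_stdp_update(weight, t_pre_ms, t_post_ms, rng,
                           p_max=0.1, tau_ms=20.0):
    """Update a binary synapse (0 or 1) from one pre/post spike pair.

    Pre-before-post (dt >= 0) may potentiate the synapse to 1;
    post-before-pre (dt < 0) may depress it to 0.
    The switch only happens with the timing-dependent probability.
    """
    dt = t_post_ms - t_pre_ms
    if rng.random() >= switch_probability(dt, p_max, tau_ms):
        return weight  # no switching event this time
    return 1 if dt >= 0 else 0


if __name__ == "__main__":
    rng = random.Random(0)
    weight = 0
    # Repeated causal pairings (pre 5 ms before post) eventually set the synapse.
    for _ in range(200):
        weight = stochastic_stdp_update(weight, 0.0, 5.0, rng)
    print("weight after causal pairings:", weight)
```

Because each synapse holds only one of two states, learning is carried by the probability of switching rather than by small analog weight changes.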

The trained synaptic weights, encoded deterministically in the magnetization state of the nano-magnets, are then used during inference. Advantageously, the use of high-energy-barrier magnets (30-40 kT, where k is the Boltzmann constant and T is the operating temperature) not only allows compact stochastic primitives, but also enables the same device to be used as a stable memory element that meets the data retention requirement. However, the barrier height of the nano-magnets used to perform sigmoid-like neuronal computations can be lowered to 20 kT for higher energy efficiency.
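The link between barrier height and stability can be estimated with the standard Néel-Arrhenius relation, in which the mean retention time grows exponentially with the barrier expressed in units of kT. The article does not cite this relation, and the attempt time of about 1 ns used below is an assumed order of magnitude, so the numbers are a rough sketch rather than device specifications.

```python
import math

# Assumed attempt time (order of 1 ns); this value is not from the article.
ATTEMPT_TIME_S = 1e-9


def retention_time_seconds(barrier_in_kt, attempt_time_s=ATTEMPT_TIME_S):
    """Neel-Arrhenius estimate: tau = tau0 * exp(barrier / kT)."""
    return attempt_time_s * math.exp(barrier_in_kt)


if __name__ == "__main__":
    for barrier in (20, 30, 40):
        print(f"{barrier} kT -> {retention_time_seconds(barrier):.3g} s")
```

Under this relation, each additional 10 kT of barrier lengthens the mean retention time by a factor of about e^10, roughly 22,000, which is why a lower barrier trades stability for easier, lower-energy switching.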

The Future of AI?

Roy said the brain-like networks can also be used to solve other difficult problems, including combinatorial optimization problems such as the traveling salesman problem and graph coloring. The proposed stochastic devices can act as “natural annealers,” helping the algorithms move out of local minima.
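The role of randomness in escaping local minima can be seen in classic simulated annealing, shown here for graph coloring. This is an ordinary software sketch, not the magnet-based hardware described above; the cooling schedule, parameter values, and function names are assumptions chosen for illustration.

```python
import math
import random


def count_conflicts(edges, colors):
    """Number of edges whose two endpoints share a color."""
    return sum(1 for u, v in edges if colors[u] == colors[v])


def anneal_coloring(num_nodes, edges, num_colors,
                    steps=20_000, t_start=2.0, t_end=0.01, seed=0):
    """Search for a low-conflict coloring with Metropolis-style acceptance."""
    rng = random.Random(seed)
    colors = [rng.randrange(num_colors) for _ in range(num_nodes)]
    cost = count_conflicts(edges, colors)
    best_colors, best_cost = colors[:], cost
    for step in range(steps):
        if best_cost == 0:
            break  # a conflict-free coloring has been found
        # Geometric cooling from t_start down to t_end.
        temperature = t_start * (t_end / t_start) ** (step / max(steps - 1, 1))
        node = rng.randrange(num_nodes)
        old_color = colors[node]
        colors[node] = rng.randrange(num_colors)
        new_cost = count_conflicts(edges, colors)
        delta = new_cost - cost
        # Always accept improvements; accept worse moves with a probability
        # that shrinks as the temperature drops. This is the escape route
        # out of local minima.
        if delta <= 0 or rng.random() < math.exp(-delta / temperature):
            cost = new_cost
            if cost < best_cost:
                best_colors, best_cost = colors[:], cost
        else:
            colors[node] = old_color  # reject the move
    return best_colors, best_cost


if __name__ == "__main__":
    # A 5-node ring, which needs three colors.
    ring = [(0, 1), (1, 2), (2, 3), (3, 4), (4, 0)]
    coloring, conflicts = anneal_coloring(5, ring, num_colors=3)
    print(coloring, conflicts)
```

In the hardware approach described in the article, the random acceptance step would come from the device's own stochastic switching rather than from a software random number generator.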

Roy has worked with the Purdue Research Foundation Office of Technology Commercialization on patented technologies that are providing the basis for some of the research at C-BRIC. They are looking for partners to license the technology, which was presented during the annual German Physical Sciences Conference earlier this month in Germany and also appeared in the journal Frontiers in Neuroscience.

Story via Purdue University.