As industrial systems become more complex and interconnected, there’s more demand for real-time fault detection and predictive maintenance. But the standard approach to AI-based monitoring—relying on cloud-connected systems for inference and analytics—introduces its own set of complications: latency, bandwidth constraints, data privacy concerns, and high infrastructure costs.

What if devices could learn and predict failures entirely on their own?

That’s the promise of on-device learning and inference: AI that operates independently, without needing constant communication with the cloud. Until recently, this remained more of a theoretical goal, because learning on the device itself was hard to fit into cost-sensitive or space-constrained industrial and consumer hardware.

But that’s starting to change.

The Case for Local AI

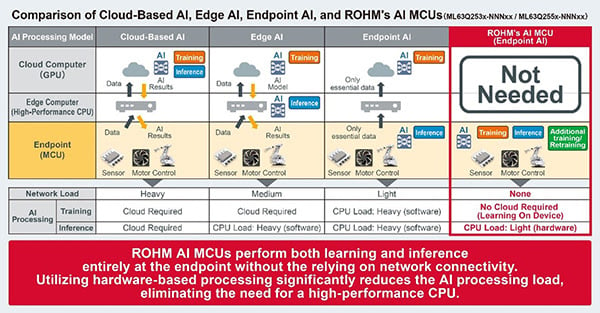

Traditional AI processing typically falls into three buckets:

- Cloud AI: Both training and inference occur remotely. Powerful but slow, dependent on stable internet connectivity.
- Edge AI: Inference happens closer to the device (e.g., on a gateway or local server), but training still takes place in the cloud.
- Endpoint AI: Inference is performed on the device itself, but models must still be trained elsewhere.

Each approach introduces trade-offs in latency, power consumption, scalability, and cost. For applications like predictive maintenance in industrial motors, residential appliances, or battery systems, those trade-offs matter. Systems must catch anomalies in real time, adapt to device-specific wear patterns, and maintain security, all without escalating operational complexity.

That’s where fully independent, on-device AI—capable of both learning and inference—enters the picture.

ROHM’s Breakthrough AI MCU

A key breakthrough in this space comes from ROHM Semiconductor, which recently announced what it describes as the industry’s first microcontroller units (MCUs) capable of performing AI-based learning and inference entirely on-device, without relying on any network connection.

The new ML63Q253x-NNNxx / ML63Q255x-NNNxx series MCUs are designed to support real-time fault prediction and anomaly detection across industrial equipment, consumer appliances, and more. They integrate a simple three-layer neural network using ROHM’s proprietary Solist-AI™ solution and leverage the AxlCORE-ODL AI accelerator to achieve AI processing speeds up to 1,000 times faster than conventional software-based MCUs (at 12 MHz).

This enables them to:

- Learn system behavior in the field (e.g., vibration patterns or motor currents)
- Identify deviations from normal operation in real time
- Adapt to unit-specific variations without retraining in the cloud
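
To make the idea of learning "normal" on the device more concrete, here is a minimal Python sketch of a three-layer network (input, hidden, output) that is trained sample by sample to reproduce its input and uses the reconstruction error as an anomaly score. This is an illustration only: it is not ROHM’s Solist-AI™ algorithm or API, and the class name `TinyAutoencoder`, the three made-up features, and all hyperparameters are assumptions chosen for the example.

```python
import math
import random


class TinyAutoencoder:
    """Hypothetical 3-layer network (input -> hidden -> output).

    Illustrative only: this is NOT ROHM's Solist-AI implementation.
    """

    def __init__(self, n_inputs, n_hidden, learning_rate=0.05, seed=0):
        rng = random.Random(seed)
        self.lr = learning_rate
        self.w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_inputs)]
                   for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [[rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
                   for _ in range(n_inputs)]
        self.b2 = [0.0] * n_inputs

    def _forward(self, x):
        hidden = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
                  for row, b in zip(self.w1, self.b1)]
        output = [sum(w * h for w, h in zip(row, hidden)) + b
                  for row, b in zip(self.w2, self.b2)]
        return hidden, output

    def score(self, x):
        """Anomaly score: mean squared reconstruction error."""
        _, output = self._forward(x)
        return sum((o - xi) ** 2 for o, xi in zip(output, x)) / len(x)

    def learn(self, x):
        """One stochastic-gradient step on a single sample."""
        hidden, output = self._forward(x)
        err = [o - xi for o, xi in zip(output, x)]
        # Back-propagate into the hidden layer before w2 is modified.
        grad_hidden = [
            (1.0 - h * h) * sum(err[i] * self.w2[i][j] for i in range(len(x)))
            for j, h in enumerate(hidden)
        ]
        for i, e in enumerate(err):
            for j, h in enumerate(hidden):
                self.w2[i][j] -= self.lr * e * h
            self.b2[i] -= self.lr * e
        for j, g in enumerate(grad_hidden):
            for k, xk in enumerate(x):
                self.w1[j][k] -= self.lr * g * xk
            self.b1[j] -= self.lr * g


# Synthetic "normal" data (assumption): three features that scale together
# with load, plus a little sensor noise.
rng = random.Random(1)


def normal_sample():
    load = rng.uniform(0.8, 1.2)
    return [load * 0.5 + rng.gauss(0, 0.01),
            load * 0.3 + rng.gauss(0, 0.01),
            load * 0.2 + rng.gauss(0, 0.01)]


model = TinyAutoencoder(n_inputs=3, n_hidden=2)
for _ in range(3000):
    model.learn(normal_sample())

normal_score = model.score([0.5, 0.3, 0.2])   # consistent with training data
anomaly_score = model.score([0.5, 0.9, 0.2])  # second feature out of pattern
print(normal_score, anomaly_score)
```

Because the network only ever sees data from one unit, the "normal" it learns is specific to that unit, which is the property the list above describes. A real part would run this kind of arithmetic in dedicated hardware rather than interpreted code.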

With a low power footprint (approximately 40 mW) and built-in motor control, CAN FD, and A/D conversion functions, these MCUs are well suited to retrofitting into existing infrastructure, particularly in environments where network access is limited or undesirable.

Why This Matters for Predictive Maintenance

Consider a factory-floor motor system. Traditionally, data is collected from sensors and transmitted to a central server or cloud environment for analysis. In the case of a failure, latency in detection—or network disruption—can lead to costly downtime.

With ROHM’s MCU deployed locally:

- The system can learn what “normal” motor operation looks like directly at the device level
- It can detect early signs of wear, load imbalance, or bearing damage
- It can issue alerts or adjust operation autonomously, without needing cloud feedback
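
The learn, detect, alert sequence above can be sketched as a small state machine. The Python example below is a hedged illustration, not ROHM firmware: `AnomalyMonitor`, the learning-window length, the `k_sigma` threshold, and the "three consecutive samples" debounce rule are all assumptions. It learns a baseline for a scalar anomaly score using Welford's running mean and variance, then raises an alert only when the score stays above the learned threshold for several samples in a row.

```python
import math


class AnomalyMonitor:
    """Hypothetical monitor: learns a baseline, then flags sustained deviations."""

    def __init__(self, learn_samples=200, k_sigma=4.0, consecutive=3):
        self.learn_samples = learn_samples  # must be at least 2
        self.k_sigma = k_sigma
        self.consecutive = consecutive
        self.count = 0
        self.mean = 0.0
        self.m2 = 0.0      # running sum of squared deviations
        self.streak = 0    # consecutive samples above threshold

    def update(self, score):
        """Feed one score; return True when an alert should be raised."""
        if self.count < self.learn_samples:
            # Learning phase: Welford's running mean/variance update.
            self.count += 1
            delta = score - self.mean
            self.mean += delta / self.count
            self.m2 += delta * (score - self.mean)
            return False
        # Monitoring phase: compare against the learned baseline.
        std = math.sqrt(self.m2 / (self.count - 1))
        threshold = self.mean + self.k_sigma * std
        self.streak = self.streak + 1 if score > threshold else 0
        return self.streak >= self.consecutive


monitor = AnomalyMonitor()
for i in range(200):
    # Synthetic baseline (assumption): a score near 1.0 with small ripple.
    monitor.update(1.0 + 0.01 * math.sin(i))

# One isolated spike is ignored; three in a row trigger the alert.
alerts = [monitor.update(s) for s in (2.0, 1.0, 2.0, 2.0, 2.0)]
print(alerts)  # [False, False, False, False, True]
```

Requiring several consecutive exceedances is one simple way to avoid reacting to a single noisy reading; the right window depends on how quickly the monitored fault develops.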

This principle can extend to a variety of use cases:

- FA sensors: Light, flow, and acoustic signals can be monitored for self-degradation and environmental shifts
- Home appliances: Predict when filters, motors, or other components are likely to fail
- Industrial robots: Optimize maintenance schedules by detecting mechanical anomalies early using endpoint data
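
In each of these cases, a raw signal typically has to be condensed into a few numbers before a small network can learn from it. The Python sketch below shows one common way to do that for a window of vibration or current samples: RMS, peak, and crest factor. The function name `window_features` and the choice of features are assumptions for illustration; the source does not say which inputs ROHM’s MCUs use.

```python
import math


def window_features(samples):
    """Return [rms, peak, crest_factor] for one window of raw samples.

    Hypothetical feature set chosen for illustration.
    """
    mean = sum(samples) / len(samples)
    centered = [s - mean for s in samples]  # remove DC offset
    rms = math.sqrt(sum(c * c for c in centered) / len(centered))
    peak = max(abs(c) for c in centered)
    crest = peak / rms if rms > 0 else 0.0
    return [rms, peak, crest]


# Synthetic signals (assumption): one full period of a sine wave, and the
# same wave with a single impact-like spike added.
clean = [math.sin(2 * math.pi * i / 1000) for i in range(1000)]
spiky = list(clean)
spiky[100] += 5.0

clean_rms, clean_peak, clean_crest = window_features(clean)
spiky_rms, spiky_peak, spiky_crest = window_features(spiky)
print(clean_crest, spiky_crest)
```

A pure sine wave has a crest factor close to the square root of 2, while impact-like events push it higher, which is why this kind of feature is often used as an early indicator of mechanical damage.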

Development Ecosystem and Tools

To accelerate adoption, ROHM offers a comprehensive development ecosystem:

- Solist-AI™ Sim: A PC-based tool for simulating AI performance pre-deployment
- Evaluation boards: Available for rapid prototyping via distributors like DigiKey, Mouser, and Farnell
- LEXIDE-Ω IDE and real-time visualization tools to assess AI inference effectiveness

This ecosystem makes it easier for developers to integrate standalone AI into existing control loops, using standard tools and programming workflows.

What’s Next?

AI is moving from the cloud into the real world—literally, into the machines, tools, and systems we rely on daily. ROHM’s AI MCUs are an early sign of this shift, proving that AI doesn’t always require big servers, big data, or big bandwidth.

Instead, the future of predictive maintenance may live in the smallest chip inside your motor—or fridge.