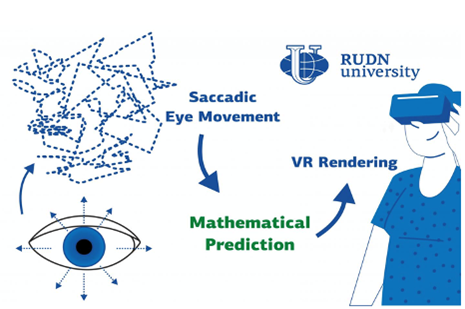

Eye movement is key to virtual and augmented reality (VR/AR) technologies. A team from MSU, together with a RUDN University professor, developed a mathematical model that accurately predicts the next gaze fixation point and reduces the error caused by blinking. The model will make VR/AR systems more realistic and responsive.

A person's gaze is focused on the foveal region, and everything else falls into peripheral vision. A computer therefore only needs to render this region in high detail. This saves computation and helps close the gap between the limited power of graphics processors and ever-increasing display resolutions. This approach, called foveated rendering, is limited by how quickly and accurately the next gaze fixation point can be predicted, which is difficult because of the complexity of the human eye. The researchers developed a mathematical modeling method that calculates the next gaze fixation points in advance.
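To make the idea concrete, here is a minimal sketch of eccentricity-based foveated rendering. Each screen tile gets a resolution scale that depends on its angular distance from the gaze point. The pixels-per-degree constant, the 5° and 20° thresholds, and the scale factors are illustrative assumptions, not values from the study. The sketch also uses a flat pixel-to-angle mapping that ignores headset lens distortion. In a real system, the gaze point passed in would ideally be the *predicted* fixation point, because a frame reaches the display some time after the gaze sample was taken.

```python
import math

# Assumed display parameter: pixels per degree of visual angle.
# Real headsets differ, and the mapping is not uniform across the lens.
PIXELS_PER_DEGREE = 20.0

# Assumed eccentricity thresholds in degrees (illustrative only).
FOVEA_DEG = 5.0
MID_PERIPHERY_DEG = 20.0


def eccentricity_deg(px, py, gaze_x, gaze_y, ppd=PIXELS_PER_DEGREE):
    """Approximate angular distance (degrees) between a pixel and the gaze point."""
    return math.hypot(px - gaze_x, py - gaze_y) / ppd


def resolution_scale(ecc_deg):
    """Relative rendering resolution for a region at the given eccentricity."""
    if ecc_deg <= FOVEA_DEG:
        return 1.0   # full detail where the fovea is looking
    if ecc_deg <= MID_PERIPHERY_DEG:
        return 0.5   # half resolution in the near periphery
    return 0.25      # quarter resolution in the far periphery


def tile_scales(width, height, tile, gaze_x, gaze_y):
    """Map each tile's top-left corner to a resolution scale based on its centre."""
    scales = {}
    for ty in range(0, height, tile):
        for tx in range(0, width, tile):
            cx, cy = tx + tile / 2, ty + tile / 2  # tile centre in pixels
            scales[(tx, ty)] = resolution_scale(eccentricity_deg(cx, cy, gaze_x, gaze_y))
    return scales


if __name__ == "__main__":
    scales = tile_scales(1920, 1080, 120, gaze_x=960, gaze_y=540)
    full = sum(1 for s in scales.values() if s == 1.0)
    print(f"{full} of {len(scales)} tiles rendered at full resolution")
```

Only the few tiles around the gaze point are drawn at full resolution. If the gaze point is stale or wrong, the user looks straight at a low-detail tile. This is why fixation prediction accuracy matters.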

The eye tracker based on the mathematical model was able to detect small eye movements of 3.4 arcminutes (about 0.06 degrees), with an error of 6.7 arcminutes (about 0.11 degrees). The team also tackled calculation errors caused by blinking: a filter included in the model reduced the error tenfold. Potential applications include VR modeling, video games, and medicine, where the technology could be used in surgery and in diagnosing vision disorders.
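The article does not describe how the blink filter works. The following is therefore only a minimal sketch of one common way to suppress blink artifacts in a gaze signal, not the researchers' filter. Samples whose eye-openness score falls below a threshold are replaced with the last valid gaze angle, and a short sliding median then removes leftover spikes. The openness signal, the 0.5 threshold, and the window size of 5 are assumptions. The `arcmin_to_deg` helper shows the unit conversion used above: 60 arcminutes make one degree, so 6.7 arcminutes is about 0.11 degrees.

```python
from statistics import median

# Assumed threshold: samples whose eye-openness score is below it are
# treated as blink artifacts. Real trackers expose different signals.
OPENNESS_THRESHOLD = 0.5


def arcmin_to_deg(arcmin):
    """Convert minutes of arc to degrees (60 arcminutes = 1 degree)."""
    return arcmin / 60.0


def fill_blinks(angles, openness, threshold=OPENNESS_THRESHOLD):
    """Replace samples recorded during a blink with the last valid gaze angle."""
    filled = []
    last_valid = None
    for angle, score in zip(angles, openness):
        if score >= threshold:
            last_valid = angle
        # Before the first valid sample there is nothing to hold, so keep the raw value.
        filled.append(last_valid if last_valid is not None else angle)
    return filled


def median_smooth(values, window=5):
    """Sliding median filter; the window shrinks at the ends of the sequence."""
    half = window // 2
    return [median(values[max(0, i - half): i + half + 1]) for i in range(len(values))]


if __name__ == "__main__":
    # Horizontal gaze angle in degrees; samples 3-5 are corrupted by a blink.
    raw = [1.00, 1.02, 1.01, 7.50, -4.20, 6.80, 1.03, 1.02]
    openness = [0.9, 0.9, 0.9, 0.1, 0.0, 0.2, 0.9, 0.9]
    clean = median_smooth(fill_blinks(raw, openness))
    print([round(a, 3) for a in clean])
    print(f"6.7 arcmin = {arcmin_to_deg(6.7):.3f} deg")
```

Holding the last valid sample keeps the blink from producing large jumps in the gaze estimate. The median step is used instead of a mean because a single outlier does not pull it off target.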

The results of the study were published in the SID Symposium Digest of Technical Papers.