Watch Out, Alexa and Siri!

Often you can blame human error for a hack, but this time the blame for a new vulnerability lies with the tech companies.

Researchers from China’s Zhejiang University found a way to attack Siri, Alexa and other voice assistants by feeding them commands at ultrasonic frequencies.

Those are too high for humans to hear, but they’re perfectly audible to the microphones on your devices.

With the technique, the researchers could get AI assistants to open malicious websites and even unlock your door if a smart lock was connected.

DolphinAttack

The relatively simple technique is called DolphinAttack. The researchers first translated human voice commands into ultrasonic frequencies (above 20 kHz). They then simply played them back from a regular smartphone equipped with an amplifier, an ultrasonic transducer and a battery (less than $3 worth of parts).
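How does a voice command get "translated" into ultrasound? The DolphinAttack paper describes amplitude modulation: the voice signal $v(t)$ rides on an ultrasonic carrier of frequency $f_c$, and the microphone's slightly nonlinear response shifts a copy of the voice back into the audible band. The derivation below is a simplified sketch, assuming a modulation depth $m$ and a microphone modeled with only a linear and a quadratic term (coefficients $A$ and $B$ are illustrative, not measured values):

$$s(t) = \bigl(1 + m\,v(t)\bigr)\cos(2\pi f_c t), \qquad f_c > 20\ \text{kHz}$$

$$s_{\text{out}}(t) = A\,s(t) + B\,s(t)^2$$

$$B\,s(t)^2 = \frac{B}{2}\bigl(1 + m\,v(t)\bigr)^2\bigl(1 + \cos(4\pi f_c t)\bigr)$$

The device's audio chain low-pass filters away everything near $f_c$ and $2f_c$, leaving

$$\frac{B}{2}\bigl(1 + 2m\,v(t) + m^2 v(t)^2\bigr),$$

which contains the term $B\,m\,v(t)$: the original command, now at frequencies the speech recognizer processes, even though nobody in the room heard anything.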

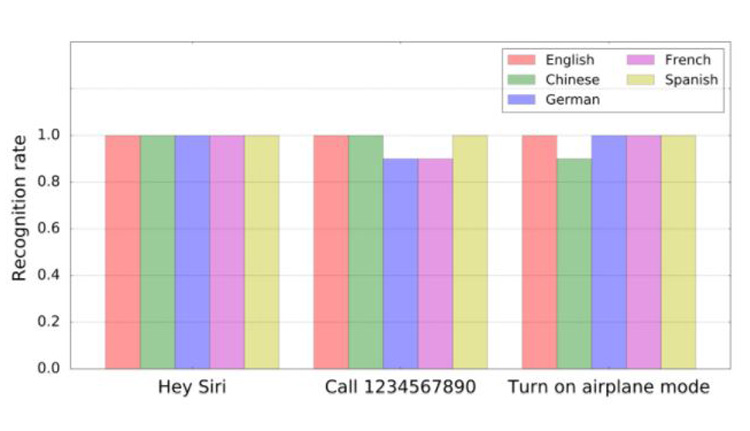

What makes the attack scary is that it works on just about anything: Siri, Google Assistant, Samsung S Voice and Alexa, on devices like smartphones, iPads, MacBooks, the Amazon Echo and even an Audi Q3. In total, the team tested 16 devices and seven speech recognition systems.

What’s worse, “the inaudible voice commands can be correctly interpreted by the SR (speech recognition) systems on all the tested hardware.” In other words, the attack works even if the attacker never touches the device and the owner has taken the usual security precautions.

The group successfully tested commands like “Call 123-456-7890,” “open Dolphinattack.com” and “Open the back door,” leaving owners vulnerable to data theft or, worse, real-life attacks.

The researchers were even able to change the navigation on an Audi Q3.

There’s one bit of good news: for now, the attack only works within five or six feet, so it’s of limited use unless the researchers can increase the transmitter’s power.

With Siri or Google Assistant, the assistant would also have to be activated, and if an ultrasonic command activated it, the assistant would play a tone or reply, alerting the user.

So for the hack to work, your phone would have to be unlocked and you would have to not be paying attention, a fairly unlikely scenario.

However, if you’re in a public place with your phone unlocked, a nearby attacker could potentially issue commands to it.

Device makers could stop this simply by programming their assistants to ignore commands at 20 kHz and above, or at any other frequency that humans can’t possibly speak in.

However, the team found that every major AI assistant-enabled device currently accepts such commands without missing a beat.

As for why the microphones respond to such frequencies at all (up to 42 kHz), an industrial designer told Fast Co. that filtering them out might lower a system’s “comprehension score.”

Some devices, like the Chromecast, also rely on ultrasound for device pairing.

For now, the researchers recommend that device makers either modify microphones so that they don’t accept signals above 20 kHz, or simply discard any voice commands at inaudible frequencies. In the meantime, if you have a dog and he starts acting weird for no reason, we wouldn’t blame you for getting paranoid.
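The second recommendation, discarding commands at inaudible frequencies, can be sketched in a few lines of Python. This is a hypothetical illustration, not the researchers' method or any vendor's code: it assumes the device captures audio at a sample rate high enough to see content above 20 kHz (96 kHz here), and the function names and the 10% energy threshold are made up for the example. It measures what fraction of an audio frame's spectral energy sits above 20 kHz and rejects the command when that fraction is too high.

```python
import numpy as np

ULTRASONIC_CUTOFF_HZ = 20_000  # roughly the upper limit of human hearing
ENERGY_RATIO_THRESHOLD = 0.1   # hypothetical tuning value, not from the researchers


def ultrasonic_energy_ratio(samples: np.ndarray, sample_rate: int) -> float:
    """Return the fraction of the frame's spectral energy above the cutoff."""
    spectrum = np.abs(np.fft.rfft(samples)) ** 2               # power per frequency bin
    freqs = np.fft.rfftfreq(len(samples), d=1.0 / sample_rate)  # bin centre frequencies in Hz
    total = spectrum.sum()
    if total == 0:
        return 0.0  # silent frame: nothing ultrasonic to worry about
    return float(spectrum[freqs > ULTRASONIC_CUTOFF_HZ].sum() / total)


def should_reject_command(samples: np.ndarray, sample_rate: int) -> bool:
    """Hypothetical gate: reject a voice command dominated by ultrasonic energy."""
    # Nyquist: frequencies above 20 kHz are only visible if we sample above 40 kHz.
    if sample_rate <= 2 * ULTRASONIC_CUTOFF_HZ:
        raise ValueError("sample rate too low to observe content above 20 kHz")
    return ultrasonic_energy_ratio(samples, sample_rate) > ENERGY_RATIO_THRESHOLD


if __name__ == "__main__":
    sr = 96_000
    t = np.arange(sr) / sr                          # one second of sample times
    audible = np.sin(2 * np.pi * 300 * t)           # 300 Hz tone, a stand-in for speech
    ultrasonic = np.cos(2 * np.pi * 25_000 * t)     # 25 kHz tone, a stand-in for an inaudible command
    print(should_reject_command(audible, sr))       # False
    print(should_reject_command(ultrasonic, sr))    # True
```

A real assistant would have to weigh this against the trade-off mentioned above: any filter strict enough to block attacks must not also throw away the ultrasonic signals used for features like device pairing.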
