In the not-too-distant future (likely not next Sunday, AD), Apple’s Siri may use motion detection to read lips, reducing its reliance on the microphone. Siri can struggle to understand spoken commands accurately, so letting it detect both mouth and head movement is expected to improve accuracy.

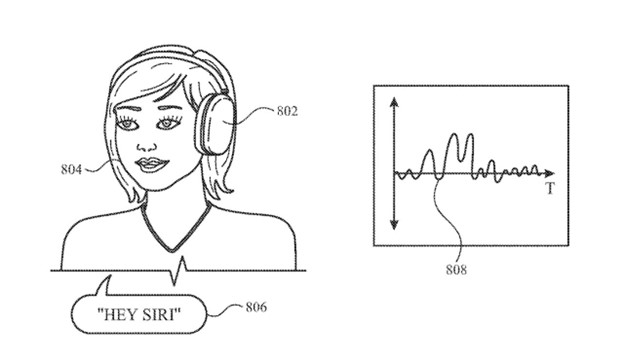

Apple filed a patent application titled “Keyword Detection Using Motion Sensing” in January this year, and the application was published on August 3. It describes how mouth movements can be compared against previously recorded data as Siri or the device searches for a match.

Apple, however, won’t ditch microphones anytime soon. Instead, motion detection could let the device switch off the microphones that constantly listen for “Siri” or “Hey Siri,” cutting down on the power and processing capacity that voice control requires. Because a user’s mouth, face, head, and neck move and vibrate during speech, accelerometers and gyroscopes can detect those motions while using much less power than audio sensors such as microphones.
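The patent coverage does not spell out the matching algorithm, so the following is only a hypothetical sketch of the general idea: a low-power loop compares a motion-sensor trace against a previously recorded motion template for the wake word and turns the microphone on only when the match is strong enough. The template-plus-correlation approach, the `MotionGatedWakeWord` class, and the 0.8 threshold are all illustrative assumptions, not Apple’s method; a production system would more likely use a trained model.

```python
import math
from typing import List, Sequence


def normalized_correlation(a: Sequence[float], b: Sequence[float]) -> float:
    """Pearson correlation of two equal-length motion traces, in [-1.0, 1.0]."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("traces must have the same length (at least 2 samples)")
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    da = [x - mean_a for x in a]
    db = [y - mean_b for y in b]
    numerator = sum(x * y for x, y in zip(da, db))
    denominator = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    # A flat (motionless) window has zero variance: treat it as "no match".
    return numerator / denominator if denominator else 0.0


def best_match(stream: Sequence[float], template: Sequence[float]) -> float:
    """Slide the template over the stream and return the highest correlation."""
    n = len(template)
    if len(stream) < n:
        return 0.0  # not enough motion data yet to compare
    return max(
        normalized_correlation(stream[i:i + n], template)
        for i in range(len(stream) - n + 1)
    )


class MotionGatedWakeWord:
    """Hypothetical gate: keeps the microphone off until motion matches the template."""

    def __init__(self, template: Sequence[float], threshold: float = 0.8) -> None:
        self.template: List[float] = list(template)  # recorded motion for "Siri" (assumed)
        self.threshold = threshold                    # illustrative value, not from the patent
        self.microphone_on = False

    def process(self, motion_window: Sequence[float]) -> bool:
        """Check one window of accelerometer/gyroscope samples; latch the mic on if matched."""
        if best_match(motion_window, self.template) >= self.threshold:
            self.microphone_on = True  # hand off to the (power-hungry) audio pipeline
        return self.microphone_on
```

Correlation is used here because it ignores differences in overall signal strength and offset, so a louder or softer utterance with the same motion shape still matches; only once that cheap check passes does the device pay for audio processing.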

Saying “Siri” should then turn on the microphone to listen for any further instructions a user might add. We expect iPhones, AirPods, and Apple Vision Pro to use the new method.