A group of UK scientists from the University of Sussex have come one step closer to transforming the human body into an interactive touchscreen.

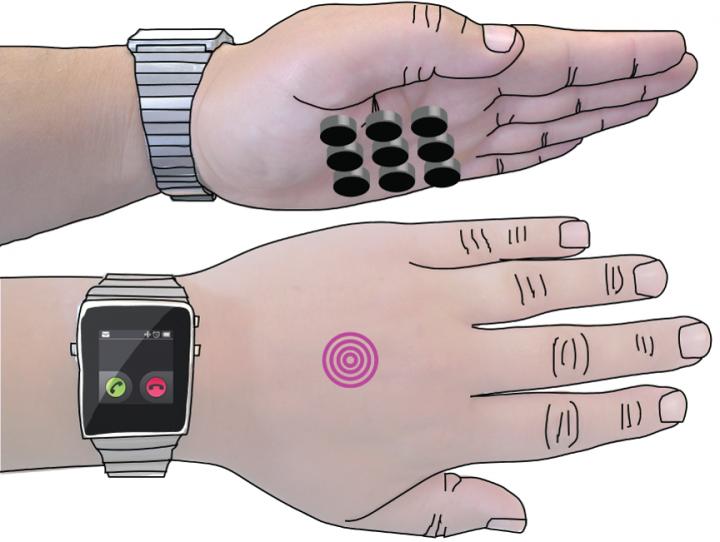

The researchers created tactile sensations on a person’s palm using ultrasound sent through the hand. This marks the first time users could actually feel what they were doing while interacting with a display projected onto their hand.

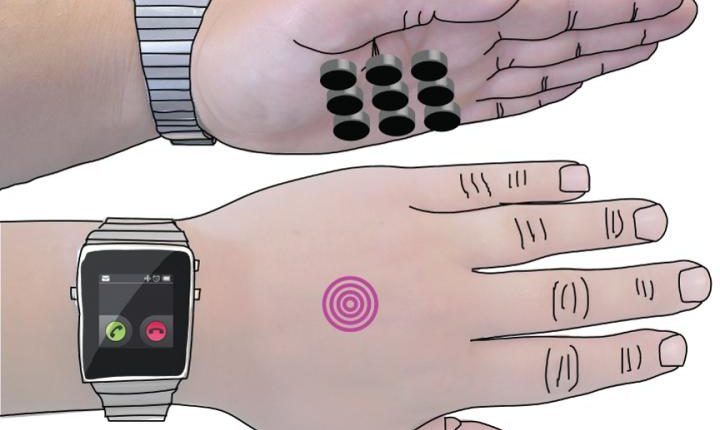

This development solves one of the biggest challenges technology companies have faced when trying to turn the human body, particularly the hand, into a display extension for the next generation of smartwatches and other smart devices. Existing versions of the technology rely on vibrations or pins, which need to be in contact with the palm to work. This contact often disrupts the projected display, so these approaches are not a feasible option.

Instead, the Sussex researchers’ new system, called SkinHaptics, sends sensations to the palm from the opposite side of the hand, which leaves the palm free to display the screen.

“If you imagine you are on your bike and want to change the volume control on your smartwatch, the interaction space on the watch is very small. So companies are looking at how to extend this space to the hand of the user,” said Sriram Subramanian, professor at the University of Sussex, who leads the research. “What we offer people is the ability to feel their actions when they are interacting with the hand.”

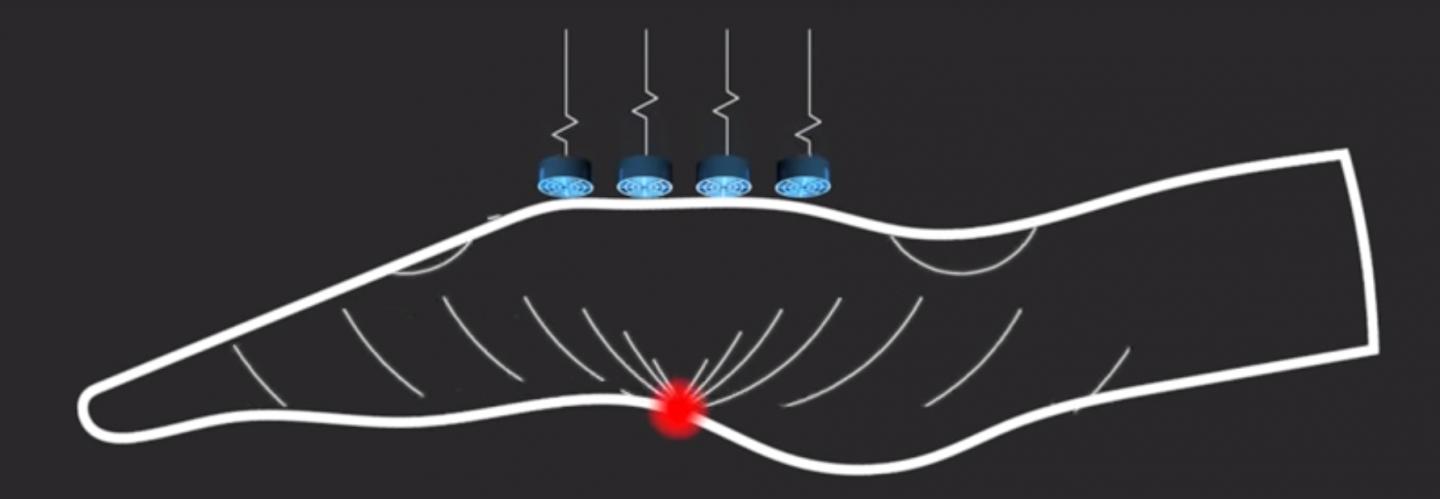

The SkinHaptics device uses time-reversal processing to send ultrasound waves through the hand. The technique can be compared to ripples in water, but in reverse: instead of spreading out, the waves become more targeted as they travel through the hand and converge on a precise point on the palm.
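To make the "ripples in reverse" idea concrete, here is a toy sketch of the general time-reversal principle. It is not the SkinHaptics implementation, and its numbers are not from the Sussex research. It assumes a few transducers, each linked to a point on the palm by a single lossless path with an integer delay (in samples), and assumes the path delay is the same in both directions. A pulse from the target point reaches transducer `i` after `d_i` samples. Playing that recording backwards means transducer `i` fires at `T - d_i`, so every pulse arrives back at the target at time `T` and adds up there, while arriving scattered in time elsewhere. The function names and delay values are hypothetical.

```python
# Toy model of time-reversal focusing. Hypothetical delays, NOT SkinHaptics data.
# Assumptions: one lossless path per transducer, integer sample delays,
# and reciprocity (same delay in both directions).
from typing import Dict, List


def time_reversed_schedule(focus_delays: List[int]) -> List[int]:
    """Firing time for each transducer: the recorded arrival, played backwards."""
    T = max(focus_delays)
    return [T - d for d in focus_delays]


def peak_amplitude(emit_times: List[int], delays: List[int]) -> int:
    """Largest number of unit pulses that land on a point at the same sample."""
    counts: Dict[int, int] = {}
    for t, d in zip(emit_times, delays):
        counts[t + d] = counts.get(t + d, 0) + 1
    return max(counts.values())


if __name__ == "__main__":
    focus = [7, 12, 9, 15, 10]       # delays from the target point to each transducer
    elsewhere = [11, 8, 14, 9, 13]   # delays from some other point on the palm
    schedule = time_reversed_schedule(focus)
    print(schedule)                              # [8, 3, 6, 0, 5]
    print(peak_amplitude(schedule, focus))       # 5: all pulses coincide
    print(peak_amplitude(schedule, elsewhere))   # 1: pulses arrive spread out
    print(peak_amplitude([0] * 5, focus))        # 1: firing together does not focus
```

Because the delays are simply recorded rather than calculated from a model of the hand, the same trick would work even when each path is different, loosely mirroring waves crossing bone and tissue.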

The team’s idea for the device came from a growing field of technology called haptics, which is the science of applying touch sensation and control to interaction with computers and technology.

“Wearables are already big business and will only get bigger. But as we wear technology more, it gets smaller and we look at it less, and therefore multi-sensory capabilities become much more important,” said Subramanian.
