Quantum AI and quantum classifier vulnerability discovered

Artificial intelligence and quantum computing are both advancing rapidly, and their fusion is spawning a new field: quantum AI. Before quantum AI can be harnessed, however, one problem must be addressed: the vulnerability of quantum classifiers.

In classical machine learning, the vulnerability of classifiers to adversarial attacks has long been studied. Recent studies are now exposing the potential vulnerability of quantum classifiers as well, through both theoretical analysis and numerical simulations.

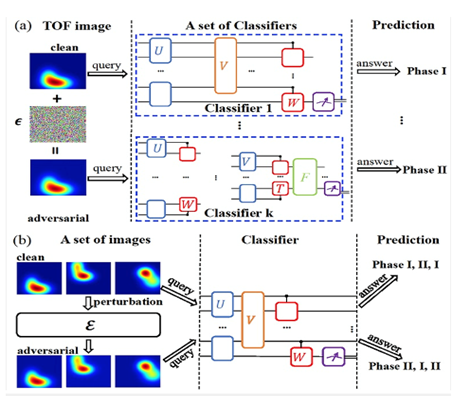

In a new research article published in the Beijing-based National Science Review, researchers from the Institute for Interdisciplinary Information Sciences (IIIS) at Tsinghua University, China, studied for the first time the universality properties of adversarial examples and perturbations for quantum classifiers. The authors proved that (i) there exist universal adversarial examples that can fool different quantum classifiers, and (ii) there exist universal adversarial perturbations which, when added to different legitimate input samples, turn them into adversarial examples for a given quantum classifier.

The authors conclude that the threshold strength a perturbation needs to deliver an adversarial attack decreases exponentially as the number of qubits increases. The paper extends this conclusion to the case of multiple quantum classifiers, rigorously proving that for a set of k quantum classifiers, increasing the perturbation strength by an amount that grows only logarithmically in k is enough to ensure a moderate universal adversarial risk.
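The exponential scaling with qubit number can be made concrete with a toy model. The sketch below is not the paper's construction; it assumes a hypothetical classifier that labels an n-qubit pure state by the sign of the expectation value of Pauli Z on its first qubit, draws test states from the Haar (uniform) distribution, and computes exactly the smallest rotation angle (Fubini-Study distance) that would move each state onto the decision boundary. The function names are illustrative only. The mechanism usually invoked for results of this kind is concentration of measure in high-dimensional Hilbert space, which this toy reproduces.

```python
import numpy as np

def haar_random_state(n_qubits, rng):
    """Sample a Haar-random pure state on n qubits (a normalized complex Gaussian vector)."""
    dim = 2 ** n_qubits
    v = rng.normal(size=dim) + 1j * rng.normal(size=dim)
    return v / np.linalg.norm(v)

def z1_expectation(psi):
    """Hypothetical toy classifier score <psi|Z_1|psi>, with Z_1 acting on the
    most significant qubit. The predicted label is the sign of this score."""
    half = psi.size // 2
    p0 = np.sum(np.abs(psi[:half]) ** 2)  # probability that qubit 1 reads 0
    return 2.0 * p0 - 1.0

def min_flip_angle(score):
    """Smallest Fubini-Study angle that moves a pure state with score cos(2*theta)
    onto the toy classifier's decision boundary, which sits at theta = pi/4."""
    theta = 0.5 * np.arccos(np.clip(score, -1.0, 1.0))
    return abs(theta - np.pi / 4)

rng = np.random.default_rng(0)
for n in range(2, 13, 2):
    angles = [min_flip_angle(z1_expectation(haar_random_state(n, rng))) for _ in range(2000)]
    print(f"n = {n:2d}  median angle to flip = {np.median(angles):.4f}")
```

For this toy, the variance of the score over Haar-random states is 1/(2^n + 1), so the median distance to the boundary should roughly halve for every two added qubits: an exponentially small perturbation suffices to flip a typical label.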

They also proved that when a universal adversarial perturbation is added to different legitimate samples, the misclassification rate of a given quantum classifier increases with the dimension of the data space, approaching 100% as that dimension goes to infinity.
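The dimension dependence of universal perturbations can likewise be illustrated with a toy model. This is again a hypothetical setup, not the paper's: it uses a classifier that labels a state by the sign of the expectation value of Z on its first qubit, takes as the single universal perturbation one fixed unit vector spread uniformly over the basis states whose first qubit is 1, adds it with strength eps = 0.2 to every Haar-random input labelled 0, renormalizes, and counts how many labels flip. The additive, renormalized perturbation and all function names are assumptions made for illustration.

```python
import numpy as np

def haar_random_state(n_qubits, rng):
    """Haar-random pure state on n qubits: a normalized complex Gaussian vector."""
    dim = 2 ** n_qubits
    v = rng.normal(size=dim) + 1j * rng.normal(size=dim)
    return v / np.linalg.norm(v)

def z1_score(psi):
    """Hypothetical toy classifier score <psi|Z_1|psi>: label 0 if positive, else label 1."""
    half = psi.size // 2
    p0 = np.sum(np.abs(psi[:half]) ** 2)
    return 2.0 * p0 - 1.0

def universal_perturbation(n_qubits):
    """One fixed unit vector shared by every input (hypothetical choice):
    uniform weight on the basis states whose first qubit is 1."""
    dim = 2 ** n_qubits
    v = np.zeros(dim, dtype=complex)
    v[dim // 2:] = 1.0
    return v / np.linalg.norm(v)

def flip_rate(n_qubits, eps, n_samples, rng):
    """Fraction of label-0 Haar-random states whose label flips after adding
    eps * v (the same v for every sample) and renormalizing."""
    v = universal_perturbation(n_qubits)
    flipped = total = 0
    for _ in range(n_samples):
        psi = haar_random_state(n_qubits, rng)
        if z1_score(psi) <= 0:
            continue  # keep only legitimate samples labelled 0
        perturbed = psi + eps * v
        perturbed /= np.linalg.norm(perturbed)
        total += 1
        flipped += int(z1_score(perturbed) <= 0)
    return flipped / total if total else 0.0

rng = np.random.default_rng(0)
for n in range(2, 13, 2):
    print(f"n = {n:2d}  flip rate = {flip_rate(n, 0.2, 1000, rng):.3f}")
```

Before renormalization, the perturbation shifts each score by a fixed amount eps**2 toward label 1, plus a fluctuation that shrinks with n, while typical margins shrink like 2^(-n/2). The flip rate in this toy should therefore climb toward 1 as n grows, mirroring the qualitative trend described above.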

These results reveal a crucial universality aspect of adversarial attacks on quantum machine learning systems.

Original Release: EurekAlert!