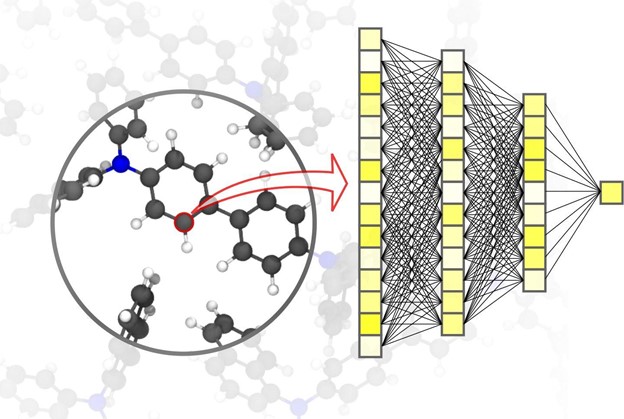

Robotic arms have been around for a long time, yet the manipulation they perform, however common, remains a challenging problem. To perform in-hand manipulation at a sophisticated level, Professor Aaron Dollar and a team of researchers designed a robotic system that maps out how it interacts with its environment and, more importantly, adapts and updates its own internal model. The researchers created algorithms that allow the system to self-identify the necessary parameters through exploratory hand-object interactions.

The results were recently published in Science Robotics (“Manipulation for self-Identification, and self-Identification for better manipulation”). The research team had the hand perform several tasks, including cup-stacking, writing with a pen, and grasping various objects.

Robotic manipulation systems are built with model parameters that are either known in advance or obtained directly from sensing modalities such as vision sensors, tactile sensors, force/torque sensors, and proprioceptive sensors. The challenge is that in many scenarios many model parameters are not directly available to the robot, whether because prior knowledge is lacking or because of limits on the available sensors and physical uncertainties.

The proposed concept of self-identification would shift the traditional paradigm of robotic manipulation from “sense, plan, act” to “act, sense, plan,” an order that is closer to how humans perform almost every manipulation task.

Original Release: Yale University